STATA

STATA comprises a complete software package, offering statistical tools for data analysis, data management and graphics. On the local HPC System we offer a multiprocessor variant of STATA/MP 13, licensed for up to 12 cores. The license allows up to 5 users to work with STATA at the same time. STATA/MP uses the paradigm of symmetric multiprocessing (SMP) to benefit from the parallel capabilities offered by many modern computers and HPC systems to speed up computations.

Accessing the HPC System

If you are not used to working in such an environment, it might at first feel somewhat intricate and long-winded. However, experience shows that most users get up to speed quickly and soon work efficiently in it.

Logging in to the HPC System

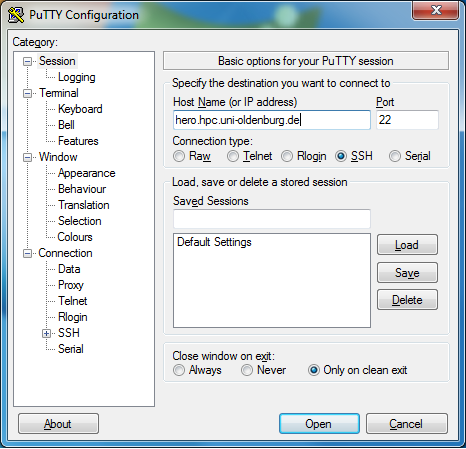

Advice on how to log in to the HPC System from either within or outside the University can be found here. If you use a Windows desktop machine, a comfortable way to log in to the HPC system is the PuTTY client. A guide on where to download the freely available PuTTY software and how to use it in order to access, say, a Unix environment can be found here. Once you have downloaded the PuTTY software (basically you will only need the binary for the secure shell, called PuTTY.exe) you might follow the steps discussed in the paragraph on "Launch PuTTY and configure for the target system" in Sect. "1. Installation and config" found under the above link. Then, in the section where you are asked to specify the destination you want to connect to, provide the following information:

- Host Name: hero.hpc.uni-oldenburg.de

- Port: 22

- Connection type: SSH

Below the PuTTY Client interface for the login procedure is shown:

Commonly used Unix commands

On the HPC system you need to use a command line interface to navigate within your home directory and to submit your actual jobs. A list of useful Unix commands and a brief tutorial on how to use them can be found here.

Editing text files

From time to time you might want to edit a file located somewhere in your HPC home directory. For this purpose you need a text editor. A simple and easy-to-learn text editor available on the HPC system is, e.g., nano.

Loading the STATA module

On the HPC system, the STATA/MP 13 software package is available as a software module. In order to load the respective module just type

module load stata

Then you can find the following STATA variants in your user environment:

- stata: a version of STATA that handles small datasets

- stata-se: a version of STATA for large datasets

- stata-mp: a fast version of STATA for multicore/multiprocessor machines

More details on the different versions can be found here.

Using STATA on the HPC system

To facilitate bookkeeping, a good first step towards using STATA on the HPC system is to create a directory in which all STATA related computations are carried out. Using the command

mkdir stata

you might thus create the folder stata in the top level of your home directory for this purpose (you might even go further and create a subdirectory mp13 specifying the precise version of STATA).

Using STATA in batch mode

On the local HPC system the convention is to use applications in batch mode rather than in interactive mode as you would do on your local workstation. This requires you to list the commands you would otherwise type in STATA's interactive mode in a file, called a do-file in STATA jargon, and to call STATA with the -b option on that do-file. To illustrate how to use STATA in batch mode on the HPC system, consider the basic linear regression example contained in Chapter 1 of the STATA Web Book Regression with STATA. For this linear regression example you might further create the subdirectory linear_regression and put the data sets on which you would like to work and all further supplementary files and scripts there. A do-file corresponding to the basic linear regression example, here called linReg.do, reads:

use elemapi

regress api00 acs_k3 meals full

For the do-file to run properly, the data file available as http://www.ats.ucla.edu/stat/stata/webbooks/reg/elemapi needs to be stored in the directory linear_regression. Further, if you did not load the STATA module yet, you need to load it via

module load stata

before you attempt to use the STATA application. In principle you could now call STATA in batch mode by typing

stata -b linReg.do

Although this is fine for small test programs that consume only few resources (in terms of running time and memory), the convention on the HPC system is to submit your job to the scheduler (here we use Sun Grid Engine (SGE) as scheduler), which assigns it to a proper execution host on which the actual computations are carried out. To do so, you have to set up a job submission file by means of which you allocate certain resources for your job. This is common practice on HPC systems on which multiple users access the available resources at a given time. Examples of such job submission scripts for both single-core and multi-core usage are detailed below.

Submitting a job: Single-slot variant

You might submit your STATA do-file using a job submission script similar to the script mySubmissionScript.sge listed below (with annotated line-numbers):

1 #!/bin/bash

2

3 #$ -S /bin/bash

4 #$ -cwd

5

6 #$ -l h_rt=0:10:0

7 #$ -l h_vmem=300M

8 #$ -l h_fsize=100M

9 #$ -N stata_linReg_test

10

11 module load stata

12 /cm/shared/apps/stata/13/stata -b linReg.do

13 mv linReg.log ${JOB_NAME}_jobId${JOB_ID}_linReg.log

Therein, in lines 6 through 8 the job requirements in terms of the resources running time (h_rt), memory (h_vmem) and scratch space (h_fsize) are allocated. These and further resource allocation statements used in the job submission script are explained here. In line 9 a name for the job is set. The module containing the STATA software package is loaded in line 11. You need to load this module in each job submission script which is used to submit STATA jobs. In line 12 the STATA program is called in batch mode and the do-file is supplied (here the linear regression example do-file linReg.do set up previously). As a detail, note that the absolute path to the STATA executable is provided (you can verify the path by simply typing which stata on the command line after the respective module is loaded). By default, STATA creates a log file with a standardized name. Here, for the do-file linReg.do, STATA will create the default log file linReg.log. If you run the same do-file several times, each run overwrites the log file of the previous one. So it might be of use to change the standard log file name to include the actual name of the job and the unique job ID assigned by the scheduler, as is done in line 13. You can submit the script by simply typing

qsub mySubmissionScript.sge
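The annotation numbers in the listing above are not part of the script itself. As a convenience, the following sketch writes a copy-pasteable version of the single-slot script to a file. The function name write_single_slot_script is hypothetical and only serves this illustration; the script content is taken from the listing above.

```shell
#!/bin/bash
# Write the single-slot submission script (without annotation numbers) to the
# file given as first argument; defaults to mySubmissionScript.sge.
# The function name is a hypothetical helper, not part of the HPC setup.
write_single_slot_script() {
    local target="${1:-mySubmissionScript.sge}"
    # The quoted delimiter 'EOF' keeps ${JOB_NAME} and ${JOB_ID} literal in the
    # file, so bash expands them only when the job runs under the scheduler.
    cat > "$target" <<'EOF'
#!/bin/bash

#$ -S /bin/bash
#$ -cwd

#$ -l h_rt=0:10:0
#$ -l h_vmem=300M
#$ -l h_fsize=100M
#$ -N stata_linReg_test

module load stata
/cm/shared/apps/stata/13/stata -b linReg.do
mv linReg.log ${JOB_NAME}_jobId${JOB_ID}_linReg.log
EOF
}
```

Calling write_single_slot_script without an argument in the directory linear_regression creates mySubmissionScript.sge there, ready to be submitted with qsub.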

As soon as the job is enqueued you can check its status by typing qstat on the command line. Immediately after submission you might obtain the output

job-ID prior name user state submit/start at queue slots ja-task-ID

---------------------------------------------------------------------------------------------------------

909537 0.00000 stata_linR alxo9476 qw 09/02/2013 12:45:41 1

According to this, the job with ID 909537 has priority 0.00000 and resides in state qw, loosely translated to "enqueued and waiting". Also, the above output indicates that the job requires 1 slot. The column ja-task-ID, which refers to the ID of a particular task within a job array, is empty since we submitted a single job rather than a job array. Soon after, the priority of the job will take a value between 0.5 and 1.0 (usually only slightly above 0.5), slightly increasing until the job starts. Once the job has finished, it is possible to retrieve information about it by using the qacct command line tool, see here.

After the job has terminated successfully, the STATA log file stata_linReg_test_jobId909537_linReg.log is available in the directory from which the job has been submitted. It contains a log of all the commands used in the STATA session and a summary of the linear regression carried out therein. Further, the directory contains the two files stata_linReg_test.o909537 and stata_linReg_test.e909537, containing additional output sent to the standard output stream and the standard error stream, respectively.

Submitting a job: Multi-slot variant

On the local HPC system, the term "slots" is used instead of "cores", and hence the title of this subsection refers to the "Multi-slot" variant of using STATA. To benefit from the parallel capabilities offered by many modern computers and HPC systems and to speed up computations, STATA/MP uses the paradigm of symmetric multiprocessing (SMP). A performance report for a multitude of commands implemented in the STATA software package, highlighting the benefit of multiprocessing, can be found here. As pointed out above, the HPC system offers STATA/MP 13, licensed for up to 12 cores.

A proper job submission script by means of which you can use the multiprocessing capabilities of STATA/MP, here called mySubmissionScript_mp.sge, is listed below (with annotated line numbers):

1 #!/bin/bash

2

3 #$ -S /bin/bash

4 #$ -cwd

5

6 #$ -l h_rt=0:10:0

7 #$ -l h_vmem=500M

8 #$ -l h_fsize=1G

9 #$ -N stata_linReg_test_smp

10

11 #$ -pe smp 3

12 #$ -R y

13

14 export OMP_NUM_THREADS=$NSLOTS

15 module load stata

16 /cm/shared/apps/stata/13/stata-mp -b linReg.do

17 mv linReg.log ${JOB_NAME}_jobId${JOB_ID}_linReg.log

Note that, compared to the single-slot submission script listed in the preceding subsection, a few additional settings are required. First, in line 11 the SMP parallel environment (PE) is requested and 3 slots are reserved. The option -R y in line 12 enables resource reservation, which prevents parallel jobs from being starved by serial jobs that "block" required slots on specific hosts. All further resource allocation statements used in the job submission script are explained here. At this point, note that the SMP parallel environment uses environment variables to control the execution of parallel jobs at runtime. Setting these variables works like setting any other environment variable and depends on the shell you are using. Above we specified a bash shell; thus, setting a value for the environment variable OMP_NUM_THREADS is done via

export OMP_NUM_THREADS=$NSLOTS

as can be seen in line 14. Also note that here, the multiprocessing variant stata-mp of the STATA program is called in line 16. Now, typing

qsub mySubmissionScript_mp.sge

enqueues the job, assigning the job ID 909618 in this case. As soon as the job is in state running, one can get an idea of where the parallel threads are running. To this end, the query qstat -g t (which lists where exactly the job is running) yields

job-ID prior name user state submit/start at queue master ja-task-ID

----------------------------------------------------------------------------------------------------------

909618 0.50535 stata_linR alxo9476 r 09/02/2013 16:27:29 mpc_std_shrt.q@mpcs101 MASTER

mpc_std_shrt.q@mpcs101 SLAVE

mpc_std_shrt.q@mpcs101 SLAVE

mpc_std_shrt.q@mpcs101 SLAVE

Note that it is not a coincidence that all subprocesses are running on the same execution host (the host mpcs101 in this case). The SMP parallel environment is somewhat special: it requires all the requested slots to be available on a single execution host, see here. A single execution host offers 12 slots, which perfectly fits the 12-core version of STATA/MP the HPC system offers. However, note that there are many users working on HERO at the same time. Upon submission you hand the job over to the scheduler, which determines an execution host on which your job will be executed. When the cluster is used extensively, it might take some time until the resources you specified are available and a proper host for the execution of your job is free. Until then, the job will reside in the queue. Typically, the more slots you allocate, the longer it will take until your job starts. Hence, it might be a good idea not to always request 12 slots for the execution of your job (i.e. an entire execution host), but a lower number which still lets you benefit from the multiprocessing capabilities of STATA/MP.
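Since the license covers at most 12 cores, the thread count exported in line 14 of the multi-slot script can be capped defensively. The following is a minimal sketch and not part of the original scripts: the helper name thread_count and the variable LICENSED_CORES are hypothetical, and the role of OMP_NUM_THREADS is taken from the text above rather than verified independently.

```shell
#!/bin/bash
# Upper limit taken from the text: the STATA/MP license covers 12 cores.
# LICENSED_CORES and thread_count are hypothetical names for this sketch.
LICENSED_CORES=12

# Print the number of threads to use for a given slot count: at least 1
# (also when the argument is empty) and at most LICENSED_CORES.
thread_count() {
    local slots="${1:-1}"
    if [ "$slots" -lt 1 ]; then
        slots=1
    elif [ "$slots" -gt "$LICENSED_CORES" ]; then
        slots="$LICENSED_CORES"
    fi
    echo "$slots"
}

# NSLOTS is set by the scheduler inside a running job; outside a job it is
# empty, in which case a single thread is used.
export OMP_NUM_THREADS="$(thread_count "${NSLOTS:-}")"
```

Inside a job requesting 3 slots this exports the same value as the plain export in line 14; the cap only matters if more slots are requested than the license covers.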

During the execution of your program, a STATA log file (here: stata_linReg_test_smp_jobId909618_linReg.log) and four other log files are created: stata_linReg_test_smp.o909618 and stata_linReg_test_smp.e909618 store job-related data sent to the standard output stream and the standard error stream, while stata_linReg_test_smp.po909618 and stata_linReg_test_smp.pe909618 contain the output and error log for the start-up of the parallel environment. The latter two files are of importance only if the job does not start up properly and the reason is related to the start-up of the parallel environment.

Note that in principle, there is a small time-overhead related to the start up of the parallel environment. Hence, for a job that finishes fast anyway, as, e.g. the basic linear regression example above, the use of the multiprocessing variant of STATA might not pay off.

Satisfying extended resource requirements

If you submit a STATA job using the job submission scripts from the preceding subsections, the scheduler assigns the job to one of the standard nodes on HERO. The total amount of memory available on these nodes is limited to 23G. Note that by using one of the job submission scripts shown above your job will only run if

- the specified running time h_rt is smaller than 192h, i.e. 8 days. If your job needs to run longer, you need to explicitly specify this, see here.

- the overall amount of memory, i.e. the number of requested slots times h_vmem (which is requested per slot), is smaller than 23G. If your job needs an overall amount of memory which exceeds 23G, you need to request one of the big nodes as execution host, see here.

All possible resource allocation statements are summarized here. What your job submission script (either the single-slot or the multi-slot variant) should look like in order to cope with extended resource requirements, for the particular cases of long running times and high memory consumption, is outlined in the following subsections.

Extensive running time

If your job is expected to run longer than 192h (i.e. 8 days) you need to tell the scheduler that it is an especially long running job. To do so, set the flag longrun=True. The corresponding single-slot variant of the job submission script, here called mySubmissionScript_longrun.sge, should then look similar to the one listed below (with annotated line numbers):

1 #!/bin/bash

2

3 #$ -S /bin/bash

4 #$ -cwd

5

6 #$ -l longrun=True

7 #$ -l h_rt=200:0:0

8 #$ -l h_vmem=300M

9 #$ -l h_fsize=100M

10 #$ -N stata_linReg_test_long

11

12 module load stata

13 /cm/shared/apps/stata/13/stata -b linReg.do

14 mv linReg.log ${JOB_NAME}_jobId${JOB_ID}_linReg.log

Note that, in order to indicate a job with an extended running time, the longrun flag is set to True in line 6.

Correspondingly, the multi-slot variant of the job submission script, here called mySubmissionScript_longrun_smp.sge should look similar to (with annotated line numbers):

1 #!/bin/bash

2

3 #$ -S /bin/bash

4 #$ -cwd

5

6 #$ -l longrun=True

7 #$ -l h_rt=200:0:0

8 #$ -l h_vmem=500M

9 #$ -l h_fsize=1G

10 #$ -N stata_linReg_test_longrun_smp

11

12 #$ -pe smp_long 3

13 #$ -R y

14

15 export OMP_NUM_THREADS=$NSLOTS

16 module load stata

17 /cm/shared/apps/stata/13/stata-mp -b linReg.do

18 mv linReg.log ${JOB_NAME}_jobId${JOB_ID}_linReg.log

Again, note the longrun flag in line 6 and further, note that the proper name of the parallel environment in line 12 now reads smp_long.

High memory consumption

If the overall amount of memory your job is expected to consume, i.e. the number of requested slots times h_vmem (which is requested per slot), exceeds 23G, or if your job requires more than 800G scratch space, your job cannot run on a standard node on HERO. In such a situation you need to request a big node as execution host. To do so, set the flag bignode=True. The corresponding single-slot variant of the job submission script, here called mySubmissionScript_bignode.sge, should then look similar to the one listed below (with annotated line numbers):

1 #!/bin/bash

2

3 #$ -S /bin/bash

4 #$ -cwd

5

6 #$ -l bignode=True

7 #$ -l h_rt=0:10:0

8 #$ -l h_vmem=30G

9 #$ -l h_fsize=100M

10 #$ -N stata_linReg_test_big

11

12 module load stata

13 /cm/shared/apps/stata/13/stata -b linReg.do

14 mv linReg.log ${JOB_NAME}_jobId${JOB_ID}_linReg.log

Note that, in order to indicate a job with an extended memory requirement (here a single-slot job requesting 30G memory), the bignode flag is set to True in line 6.

Correspondingly, the multi-slot variant of the job submission script, here called mySubmissionScript_bignode_smp.sge should look similar to (with annotated line numbers):

1 #!/bin/bash

2

3 #$ -S /bin/bash

4 #$ -cwd

5

6 #$ -l bignode=True

7 #$ -l h_rt=0:10:0

8 #$ -l h_vmem=5G

9 #$ -l h_fsize=10G

10 #$ -N stata_linReg_test_big_smp

11

12 #$ -pe smp 6

13 #$ -R y

14

15 export OMP_NUM_THREADS=$NSLOTS

16 module load stata

17 /cm/shared/apps/stata/13/stata-mp -b linReg.do

18 mv linReg.log ${JOB_NAME}_jobId${JOB_ID}_linReg.log

Again, note the bignode flag in line 6. Here, a 6-slot job with 5G per slot was requested. Finally, note that you can also combine the resource requirements bignode and longrun. In that case, keep in mind that for longrun jobs the proper name of the parallel environment is smp_long.
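The rules of this section (more than 192h running time requires longrun, more than 23G overall memory requires bignode, and longrun jobs use the parallel environment smp_long) can be condensed into a small helper. This is a sketch under stated assumptions: the function names to_megabytes and extra_directives are hypothetical, only the suffixes M and G are handled with 1G counted as 1024M, the running time is given in whole hours, and the 800G scratch space limit is not checked.

```shell
#!/bin/bash
# Convert a memory value such as 500M or 5G to megabytes.
# Assumption: only the suffixes M and G are handled, with 1G = 1024M.
to_megabytes() {
    local value="$1"
    case "$value" in
        *G) echo $(( ${value%G} * 1024 )) ;;
        *M) echo "${value%M}" ;;
        *)  echo "unsupported memory value: $value" >&2; return 1 ;;
    esac
}

# Print the extra directives a job needs, given its running time in whole
# hours, its number of slots and its per-slot h_vmem.
# Thresholds from the text: longer than 192h, more than 23G overall memory.
extra_directives() {
    local hours="$1" slots="$2" vmem="$3"
    local per_slot total pe="smp"
    per_slot=$(to_megabytes "$vmem") || return 1
    total=$(( per_slot * slots ))
    if [ "$hours" -gt 192 ]; then
        echo '#$ -l longrun=True'
        pe="smp_long"          # longrun jobs need the smp_long environment
    fi
    if [ "$total" -gt $(( 23 * 1024 )) ]; then
        echo '#$ -l bignode=True'
    fi
    if [ "$slots" -gt 1 ]; then
        echo "#\$ -pe $pe $slots"
    fi
}
```

For example, extra_directives 200 6 5G describes a 200h job with 6 slots of 5G each and prints the longrun flag, the bignode flag and the request for 6 slots in the smp_long environment.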

Checking the status of a job

After you have submitted a job, the scheduler assigns it a unique job ID. You might then use the qstat tool in conjunction with the job ID to check the current status of the respective job. Details on how to check the status of a job can be found here. Once the job has finished, it is possible to retrieve information about it by using the qacct tool, see here.

Mounting your home directory on HERO

Consider a situation where you would like to transfer a large amount of data to the HPC System in order to analyze it via STATA. Similarly, consider a situation where you would like to transfer lots of already processed data from your HPC account to your local workstation. Then it is useful to mount your home directory on the HPC System in order to conveniently cope with such a task. Details about how to mount your HPC home directory can be found here.

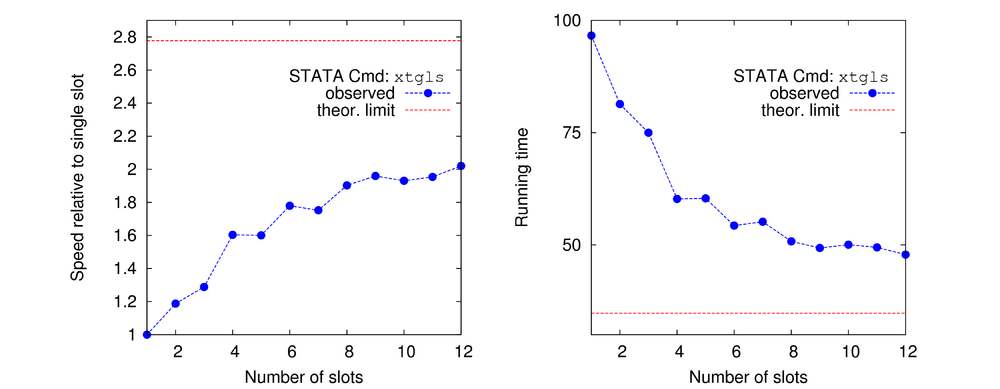

Benchmark for the particular command xtgls

A very nice feature of the STATA/MP software package is that it is very well documented. In this regard, a document exhaustively addressing the issue of how well the running time of a particular STATA command scales by using an increasing number of slots (or cores, for that matter) can be found here. Below a benchmark test of the STATA command xtgls, used to fit panel-data linear models using feasible generalized least squares (for further explanation, see here), carried out on the local HPC system for a big set of input data is presented.

To estimate the running time of the single-slot variant of the corresponding job, the STATA variant stata-se is used (since the problem instance is rather large). For all the multi-slot jobs, the variant stata-mp is used. For comparison, consider the documentation of the behavior of the xtgls command under parallel usage on page 33 and illustrated as Fig. 416 on page 139 of the above mentioned document. Note that therein, a different data set was considered for the benchmark. Also note that not all of the commands implemented in STATA are parallelizable to the same extent. A measure for this is the percentage of parallelization, which, according to the above mentioned document, amounts to 64% for the xtgls command. This sets an upper bound on the speedup that can theoretically be gained by using multiple cores. In case of the xtgls command, this theoretically maximal speedup is about 2.8. However, note that the percentage of parallelization is an experimentally observed quantity, assessed for the particular data set used by the STATA staff.
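The quoted bound of about 2.8 is consistent with Amdahl's law, assuming that the 64% figure is the fraction p of the running time that can be parallelized. That the bound was derived this way is an assumption made here for illustration; with n denoting the number of slots, the speedup S(n) and its limit read:

```latex
S(n) = \frac{1}{(1 - p) + \dfrac{p}{n}}, \qquad
\lim_{n \to \infty} S(n) = \frac{1}{1 - p} = \frac{1}{1 - 0.64} \approx 2.78
```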

Below, the running times (in seconds) of the xtgls command as a function of the number of used slots are tabulated. Note that these numbers are listed as observed on the local HPC system for a different data set than the one used by the STATA staff. This should be taken into consideration when comparing the speedup to the theoretical upper limit.

| Number of slots | Running time (sec.) | Speedup |
|---|---|---|
| 1 | 5798 (96 min) | 1.00 |
| 2 | 4882 (81 min) | 1.19 |
| 3 | 4500 (75 min) | 1.29 |
| 4 | 3615 (60 min) | 1.60 |
| 5 | 3622 (60 min) | 1.60 |
| 6 | 3258 (54 min) | 1.78 |
| 7 | 3309 (55 min) | 1.75 |
| 8 | 3047 (50 min) | 1.90 |
| 9 | 2959 (49 min) | 1.96 |
| 10 | 3003 (50 min) | 1.93 |
| 11 | 2967 (49 min) | 1.95 |
| 12 | 2870 (47 min) | 2.02 |

Further, the running time and the speedup relative to the single slot job are illustrated in the subsequent figures:

The speedup for the xtgls command relative to the single-slot job found here attains its maximal value of approximately 2 for the maximally allowed number of 12 slots. Although this seems inferior to the ideal speedup, where doubling the number of slots would double the speedup, it is characteristic of applications that can only be parallelized to some extent. Here, as quoted in the documentation of the STATA/MP commands, the maximal speedup that might theoretically be expected in the limit of an infinite number of slots is about 2.8. Note that there are other commands in the STATA software package that behave much better under parallelization than xtgls. However, there are also ones that parallelize much worse.
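The measured speedups can be recomputed from the running times in the table and set against a model prediction. The sketch below assumes that Amdahl's law with a parallel fraction of 0.64 is an adequate model for xtgls; this model choice and the function names measured_speedup and amdahl_speedup are assumptions of this illustration, while the running times are copied from the table.

```shell
#!/bin/bash
# Measured speedup: single-slot running time divided by n-slot running time.
measured_speedup() {
    awk -v t1="$1" -v tn="$2" 'BEGIN { printf "%.2f\n", t1 / tn }'
}

# Speedup predicted by Amdahl's law for a parallel fraction p on n slots.
# Using this law here is an assumption, not a statement from the benchmark.
amdahl_speedup() {
    awk -v p="$1" -v n="$2" 'BEGIN { printf "%.2f\n", 1 / ((1 - p) + p / n) }'
}

# Running times in seconds for 2, 4, 8 and 12 slots, copied from the table,
# each compared against the single-slot running time t1.
t1=5798
for pair in 2:4882 4:3615 8:3047 12:2870; do
    n="${pair%%:*}"
    tn="${pair##*:}"
    echo "$n slots: measured $(measured_speedup "$t1" "$tn"), model $(amdahl_speedup 0.64 "$n")"
done
```

For 12 slots this reports a measured speedup of 2.02 against a model value of 2.42, so the measurement stays below the prediction, as expected for a different data set and with the start-up overhead of the parallel environment included.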