Partitions

Introduction

The basic resource in Slurm for computations is a compute node. Compute nodes are organized into partitions, which are simply logical sets of compute nodes. Partitions may overlap, and they typically, but not always, correspond to specific node configurations.

Partitions define limitations that restrict the resources that can be requested for a job submitted to that partition. The limitations affect the maximum run time, the amount of memory, and the number of available CPU cores (which are called CPUs in Slurm). In addition, partitions may also define default resources that are automatically allocated for jobs if nothing has been specified.

Jobs should be submitted to the partition that best matches the required resources. For example, a job that requires 50G of RAM is better submitted to a partition with a RAM limit of 117G than to one with a RAM limit of 495G. That way, as few resources as possible are blocked, and another user who needs more RAM can run a job earlier. Of course, other considerations may also influence the choice of a partition.
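The selection rule above can be sketched as a small shell function. This is only an illustration: the helper name `pick_partition` is hypothetical, the limits are the "Max Memory per Node" values of the CARL partitions, and the sketch considers memory only, ignoring core counts, GPUs, and current load.

```shell
# Hypothetical helper: print the CARL partition with the smallest
# per-node memory limit that still satisfies the request.
# $1 = required memory per node in GB (integer)
pick_partition() {
    need_gb=$1
    # name:limit pairs in ascending order of the limit (GB per node)
    for entry in mpcl.p:117 mpcs.p:243 mpcb.p:495 mpcp.p:1975; do
        name=${entry%%:*}
        limit=${entry##*:}
        if [ "$need_gb" -le "$limit" ]; then
            echo "$name"
            return 0
        fi
    done
    echo "no single node offers ${need_gb}G" >&2
    return 1
}

pick_partition 50    # prints mpcl.p
```

A job needing 50G therefore lands on mpcl.p rather than on the larger mpcb.p nodes.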

Summary of Available Partitions

CARL and EDDY have several partitions available, and most of them correspond directly to a node type (node types may differ in the number of CPU cores, the amount of RAM, and so on). Exceptions are the partitions carl.p and eddy.p, which serve as default partitions and combine nodes of different types. The following table gives an overview of the available partitions and their resource limits.

| Partition | Node Type | Node Count | CPU Cores | Default RunTime | Default Memory per Core | Max Memory per Node | Misc |
|---|---|---|---|---|---|---|---|
| CARL | | | | | | | |
| mpcs.p | MPC-STD | 158 | 24 | 2h | 10 375M | 243G | |
| mpcl.p | MPC-LOM | 128 | 24 | 2h | 5 000M | 117G | |
| mpcb.p | MPC-BIG | 30 | 16 | 2h | 30G | 495G | GTX 1080 (4 nodes with 2 GPUs each) |
| mpcp.p | MPC-PP | 2 | 40 | 2h | 50G | 1975G | |
| mpcg.p | MPC-GPU | 9 | 24 | 2h | 10 375M | 243G | 1-2x Tesla P100 GPU |
| carl.p | Combines mpcl.p and mpcs.p, defaults are as for mpcl.p | | | | | | |
| EDDY | | | | | | | |
| cfdl.p | CFD-LOM | 160 | 24 | 2h | 2 333M | 56G | |
| cfdh.p | CFD-HIM | 81 | 24 | 2h | 5 000M | 117G | |
| cfdg.p | CFD-GPU | 3 | 24 | 2h | 10G | 243G | 1x Tesla P100 GPU |
| eddy.p | Combines cfdl.p and cfdh.p, defaults are as for cfdl.p | | | | | | |

The default time is used if a job is submitted without specifying a maximum runtime with the option --time. Likewise, a job submitted without one of the options --mem or --mem-per-cpu is automatically allocated the default memory per requested core.
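As a minimal sketch of how these defaults play out, the job script below sets --time and --mem-per-cpu explicitly and also computes what the default allocation would have been. The helper `default_mem_mb` and all requested values are illustrative assumptions; the default of 5000M per core is the one listed for carl.p.

```shell
#!/bin/bash
# Illustrative job script: the requested values are assumptions,
# not site recommendations. Explicit limits override the partition
# defaults (2h run time, 5000M per core on carl.p).
#SBATCH --partition=carl.p
#SBATCH --ntasks=4
#SBATCH --time=0-04:00:00
#SBATCH --mem-per-cpu=4000M

# Hypothetical helper: total memory in MB for a job.
# $1 = requested cores, $2 = memory per core in MB
default_mem_mb() {
    echo $(( $1 * $2 ))
}

# Without --mem or --mem-per-cpu, 4 cores would get 4 x 5000M.
echo "Default for 4 cores on carl.p: $(default_mem_mb 4 5000)M"
echo "Requested instead:             $(default_mem_mb 4 4000)M"
```

Outside of Slurm the `#SBATCH` lines are plain comments, so the script can be run directly to check the arithmetic before submitting it with sbatch.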

Getting Information about the Partitions on CARL and EDDY

To get more detailed information about a partition and its resource limits, you can use the command scontrol:

    $ scontrol show part carl.p
    PartitionName=carl.p
       AllowGroups=carl,hrz AllowAccounts=ALL AllowQos=ALL
       AllocNodes=ALL Default=NO QoS=N/A
       DefaultTime=02:00:00 DisableRootJobs=YES ExclusiveUser=NO GraceTime=0 Hidden=NO
       MaxNodes=UNLIMITED MaxTime=21-00:00:00 MinNodes=1 LLN=NO MaxCPUsPerNode=24
       Nodes=mpcl[001-128],mpcs[001-158]
       PriorityJobFactor=1 PriorityTier=1 RootOnly=NO ReqResv=NO OverSubscribe=NO PreemptMode=OFF
       State=UP TotalCPUs=6864 TotalNodes=286 SelectTypeParameters=NONE
       DefMemPerCPU=5000 MaxMemPerNode=120000
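If only a single limit is of interest, the key=value pairs can be filtered with standard tools. The following is a sketch under the assumption that the output keeps the key=value layout shown above; the helper name `partition_field` is hypothetical.

```shell
# Hypothetical helper: extract one value from `scontrol show part` output.
# $1 = key name (e.g. MaxTime); the scontrol output is read from stdin.
partition_field() {
    # Put every key=value pair on its own line, then print the value
    # of the first pair whose key matches exactly.
    tr -s ' ' '\n' |
        awk -F= -v key="$1" '$1 == key { print substr($0, length(key) + 2); exit }'
}

# Sample lines copied from the output above; on the cluster, use
#   scontrol show part carl.p | partition_field MaxTime
partition_field MaxTime <<'EOF'
PartitionName=carl.p
   MaxNodes=UNLIMITED MaxTime=21-00:00:00 MinNodes=1 LLN=NO MaxCPUsPerNode=24
   DefMemPerCPU=5000 MaxMemPerNode=120000
EOF
```

For the sample input this prints the maximum run time, 21-00:00:00.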

The Slurm job scheduler can also provide information about the current status of the cluster and the partitions using the command sinfo. Since we have quite a few partitions, it is a good idea to add an option to view only the information about a specific partition:

    $ sinfo -p carl.p
    PARTITION AVAIL TIMELIMIT  NODES STATE  NODELIST
    carl.p    up    21-00:00:0     2 mix$   mpcl032,mpcs112
    carl.p    up    21-00:00:0     4 drain* mpcl[076,106,113],mpcs019
    carl.p    up    21-00:00:0   132 mix    mpcl[006-009,013,016,018,020,022-029,033,038-040,043,046,063-070,074-075,087-105,107,112,114-126],mpcs[001,003,005,008,018,044,055-059,063-105,113,136-139,152-158]
    carl.p    up    21-00:00:0   143 alloc  mpcl[001-005,010-012,014-015,017,019,021,030-031,034-037,041-042,044-045,047-062,071-073,077-086,108-111,127-128],mpcs[002,004,006-007,009-017,020-043,045-054,060,062,106-111,114-135,144-151]
    carl.p    up    21-00:00:0     5 idle   mpcs[061,140-143]

In the output, you can see the STATE of the nodes, which can be idle if the node is free, alloc if the node is busy, and mix if the node is busy but has free resources available. Other states can be drain or down, if the node is not available.
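To see at a glance how many nodes are in a given state, the NODES column can be summed per STATE. This is a rough sketch that assumes the default six-column sinfo layout shown above; the helper name `nodes_in_state` is hypothetical.

```shell
# Hypothetical helper: sum the NODES column for all rows in a given STATE.
# Reads the default sinfo table on stdin:
#   PARTITION AVAIL TIMELIMIT NODES STATE NODELIST
# The state is matched exactly, so "mix" does not include "mix$".
nodes_in_state() {
    awk -v state="$1" 'NR > 1 && $5 == state { n += $4 } END { print n + 0 }'
}

# Sample rows copied from the output above; on the cluster, use
#   sinfo -p carl.p | nodes_in_state idle
nodes_in_state idle <<'EOF'
PARTITION AVAIL TIMELIMIT NODES STATE NODELIST
carl.p up 21-00:00:0 4 drain* mpcl[076,106,113],mpcs019
carl.p up 21-00:00:0 5 idle mpcs[061,140-143]
EOF
```

For the two sample rows the function prints 5, the number of idle nodes.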

The command sinfo has many additional options to modify the output. Some important ones are:

- -a, --all

- Display information about all partitions. You will even see partitions that are not available for your group and hidden partitions.

- -l, --long

- Display more detailed information about the available partitions.

- -N, --Node

- Display a list of every available node.

- -n <nodes>, --nodes=<nodes>

- Display information about specific nodes. Multiple nodes may be given as a comma-separated list. You can even specify a range of nodes, e.g. mpcs[100-120].

- -O <output_format>, --Format=<output_format>

- Specify the information you want to be displayed.

- If you want to, for example, display the node hostname, the number of CPUs, the CPU load, the amount of free memory, the size of temporary disk, the size of memory per node (in megabytes) you could use the following command:

      $ sinfo -O nodehost,cpus,cpusload,freemem,disk,memory
      HOSTNAMES CPUS CPU_LOAD FREE_MEM TMP_DISK MEMORY
      cfdh076   24   1.01     97568    115658   128509
      . . .

- The size of each field can be modified (syntax: type[:[.]size]) to match your needs, for example like this:

      $ sinfo -O nodehost:8,cpus:5,cpusload:8,freemem:10,disk:10,memory:8
      HOSTNAMECPUS CPU_LOADFREE_MEM TMP_DISK MEMORY
      cfdh076 24 1.01 97568 115658 128509
      . . .
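The -O output is also convenient for filtering. As a sketch, the function below lists the hosts that report at least a given amount of free memory. It assumes a two-column input (hostname, free memory in megabytes) with one header line, as produced by `sinfo -O nodehost,freemem`; the helper name `nodes_with_free_mem` is hypothetical.

```shell
# Hypothetical helper: print hosts whose FREE_MEM is at least $1 megabytes.
# Non-numeric values (e.g. for unavailable nodes) are treated as 0.
nodes_with_free_mem() {
    awk -v min="$1" 'NR > 1 && $2 + 0 >= min { print $1 }'
}

# Row taken from the example above; on the cluster, use
#   sinfo -O nodehost,freemem | nodes_with_free_mem 50000
nodes_with_free_mem 50000 <<'EOF'
HOSTNAMES FREE_MEM
cfdh076 97568
EOF
```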

The full list of options and further information about the command sinfo can be found in the sinfo documentation.

Usage of the Partitions on CARL/EDDY

To optimize the submission, the runtime and the overall usage for everybody using the cluster, you should always specify the right partition for your jobs. Using either the carl.p or the eddy.p partition is always a good choice. Try to avoid using the all_nodes.p partition. Only use it if the other partitions don't have enough nodes and the runtime of your job doesn't exceed 1 day.

Partitions on CARL/EDDY

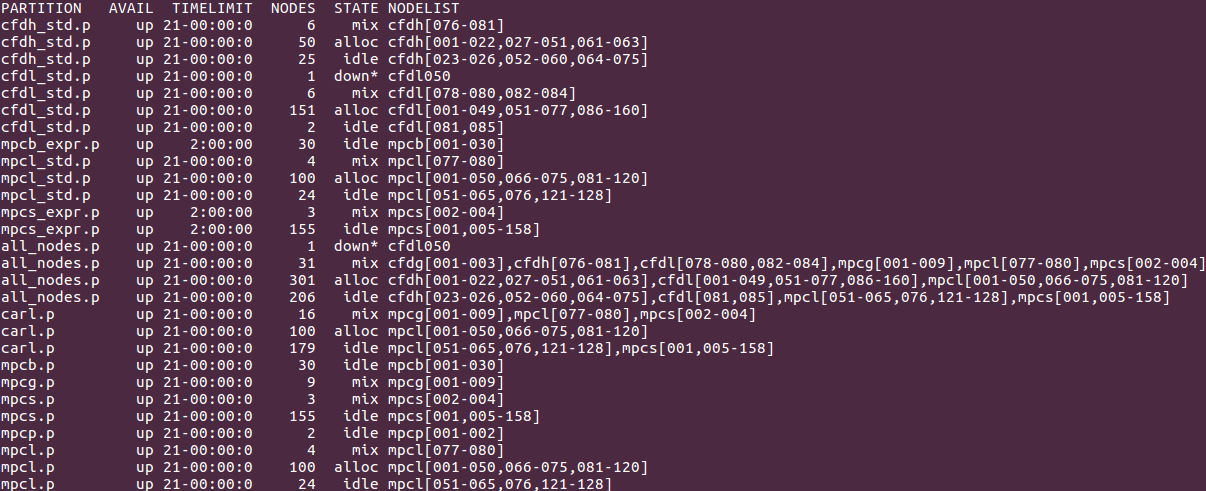

Using sinfo on CARL/EDDY will display an output like this:

The columns shown in the screenshot are:

- PARTITION

- Name of a partition, e.g. carl.p or eddy.p

- AVAIL

- State of the partition (up or down)

- TIMELIMIT

- Maximum time limit for any user job in days-hours:minutes:seconds.

- NODES

- Count of nodes with this particular configuration.

- STATE

- Current state of the node. Possible states are: allocated, completing, down, drained, draining, fail, failing, future, idle, maint, mixed, perfctrs, power_down, power_up, reserved and unknown

- NODELIST

- Names of nodes associated with this configuration/partition.

The full description of the output fields can be found in the sinfo documentation.

Using GPU Partitions

When using GPU partitions, you must add the following GPU flag to your Slurm jobs if you need to allocate GPUs:

--gres=gpu:1 - This will request one GPU per node.

--gres=gpu:2 - This will request two GPUs per node.
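A minimal GPU job script might look like the sketch below. The partition and the --gres flag follow the text above; the run time and the helper `visible_gpu_count` are illustrative, and the script assumes that Slurm exports CUDA_VISIBLE_DEVICES as a comma-separated list for jobs that were allocated GPUs.

```shell
#!/bin/bash
# Illustrative GPU job script; the run time is an assumed value.
#SBATCH --partition=mpcg.p
#SBATCH --gres=gpu:1
#SBATCH --time=0-01:00:00

# Hypothetical helper: count the GPUs visible to the job.
# Prints 0 if CUDA_VISIBLE_DEVICES is unset or empty.
visible_gpu_count() {
    if [ -z "${CUDA_VISIBLE_DEVICES:-}" ]; then
        echo 0
    else
        echo "$CUDA_VISIBLE_DEVICES" | tr ',' '\n' | wc -l | tr -d ' '
    fi
}

echo "GPUs visible to this job: $(visible_gpu_count)"
```

If the job was submitted without the --gres flag, the count would be expected to be 0 even on a GPU node, which makes this a quick sanity check at the start of a job.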

To learn more about submitting jobs, you might want to take a look at this page.