WRF/WPS

Contributor: Albensoeder


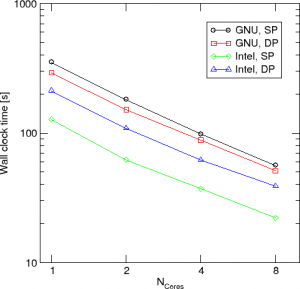

|[[ | |[[Image:scaling_wrf_jan.png|center|thumb|300px|Scaling of WRF for different compilers and precisions]] | ||

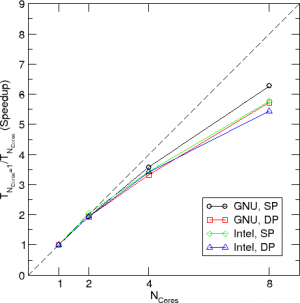

|[[ | |[[Image:speedup_wrf_jan.png|center|thumb|300px|Speedup of WRF for different compilers and precisions. Note the times are normalized by the time for one core with the same setup.]] | ||

|- | |- | ||

|} | |} | ||

Latest revision as of 09:22, 30 May 2016

The software WRF (Weather Research and Forecasting Model) is a numerical mesoscale weather prediction system.

Available real modules

On FLOW, WRF/WPS is available as a module. So far, WRF is available for em_real in single (SP) and double (DP) precision. The available modules can be listed with

module avail wrf

The modules also provide two helper scripts,

setup_wps_dir.sh [DIRECTORY_NAME]

and (for em_real)

setup_wrf_dir.sh [DIRECTORY_NAME]

which set up a directory for preprocessing with WPS and for the simulation with WRF, respectively. By default, the scripts create a new directory named wps or wrf, respectively, and copy the required files into it. Optionally, a different DIRECTORY_NAME can be specified.
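A typical em_real setup with these scripts might look like the sketch below. The module version, the precision suffix and the run directory name jan_case are example values (assumptions; check `module avail wrf` for the installed names). The `run` wrapper is a hypothetical helper that only prints each command unless DRY_RUN=0 is set, so the sketch can be read or tested without touching the module system.

```bash
#!/bin/bash
# Sketch of a typical em_real setup on FLOW (example values, not official).
# By default every command is only printed (dry run); set DRY_RUN=0 to execute.

WRF_VERSION="${WRF_VERSION:-3.4.1}"   # example version, see "module avail wrf"
PRECISION="${PRECISION:-SP}"          # SP or DP
RUN_DIR="${RUN_DIR:-jan_case}"        # hypothetical name for the WRF run directory

# Hypothetical helper: print the command, and run it only if DRY_RUN=0.
run() {
  echo "+ $*"
  if [ "${DRY_RUN:-1}" = "0" ]; then
    "$@"
  fi
}

run module avail wrf                                       # list installed WRF modules
run module load "wrf/${WRF_VERSION}/em_real/${PRECISION}"  # load one WRF build
run setup_wps_dir.sh                                       # creates ./wps for WPS preprocessing
run setup_wrf_dir.sh "$RUN_DIR"                            # creates ./$RUN_DIR for the WRF run
```

Because `module load` only changes the environment of the shell that executes it, such a script would have to be sourced (for example `DRY_RUN=0 . ./setup_run.sh`, where the file name is just an example) or the commands typed interactively.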

Available ideal modules

Since WRF 3.5, the following ideal cases are installed:

- em_b_wave
- em_heldsuarez
- em_les
- em_quarter_ss
- em_scm_xy
- em_tropical_cyclone

Note that WPS is not needed for these cases and is therefore not included in their modules.

Private installation

For a current installation script, please ask Stefan Harfst.

SGE script

A basic script to submit WRF to SGE is shown below:

    #!/bin/bash
    #
    # ==== SGE options ====
    #
    # --- Which shell to use ---
    #$ -S /bin/bash
    #
    # --- Name of the job ---
    #$ -N MY_WRF_JOB
    #
    # --- Change to directory where job was submitted from ---
    #$ -cwd
    #
    # --- merge stdout and stderr ---
    #$ -j y
    #
    # ==== Resource requirements of the job ====
    #
    # --- maximum walltime of the job (hh:mm:ss) ---
    # PLEASE MODIFY TO YOUR NEEDS!
    #$ -l h_rt=18:00:00
    #
    # --- memory per job slot (= core) ---
    #$ -l h_vmem=1800M
    #
    # --- disk space ---
    # OPTIONALLY, PLEASE MODIFY TO YOUR NEEDS! (remove the first "#" to activate)
    ##$ -l h_fsize=100G
    #
    # --- which parallel environment to use, and number of slots (should be a multiple of 12) ---
    # PLEASE MODIFY TO YOUR NEEDS!
    #$ -pe impi 24
    #
    # load module, here WRF 3.4.1 in single precision
    module load wrf/3.4.1/em_real/SP
    # Start real.exe in parallel (OPTIONALLY, ONLY IF NEEDED)
    #mpirun real.exe
    # Start wrf.exe in parallel
    mpirun wrf.exe

The job script has to be submitted from your WRF data directory, because the -cwd option makes the job run in the directory from which it was submitted.
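The `-pe impi` request should be a multiple of 12, presumably because a FLOW node has 12 cores (an assumption). The sketch below rounds a desired core count up to the next multiple of 12 and reports the total memory implied by the per-slot h_vmem limit, assuming h_vmem counts per slot as the script comment states. The function name `sge_slots` is hypothetical, and the default of 1800 MB per slot is taken from the example script.

```bash
#!/bin/bash
# Hypothetical helper: round a core count up to a multiple of the node size
# (12 cores per node assumed) and print matching SGE directives.
sge_slots() {
  local cores="$1"
  local per_node="${2:-12}"          # assumed cores per node
  local vmem_mb="${3:-1800}"         # h_vmem per slot in MB, as in the example script
  local slots=$(( (cores + per_node - 1) / per_node * per_node ))
  echo "#$ -pe impi ${slots}"
  echo "#$ -l h_vmem=${vmem_mb}M"
  echo "# total memory requested: $(( slots * vmem_mb )) MB"
}

sge_slots 20
```

For 20 cores this requests 24 slots and therefore 24 x 1800 MB = 43200 MB in total. The printed directives can be pasted into the job script, which is then submitted from the WRF run directory with `qsub`, e.g. `qsub wrf_job.sh` (the file name is only an example).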

Scaling

Here is a short example (January test case, see the WRF tutorial) of the scaling and wall-clock times for different compilers in single (SP) and double (DP) precision:

| #Cores | GNU 4.7.1, SP [s] | GNU 4.7.1, DP [s] | Intel 13.0.1, SP [s] | Intel 13.0.1, DP [s] | Intel vs. GNU, SP | Intel vs. GNU, DP |
|---|---|---|---|---|---|---|
| 1 | 351 | 292 | 127 | 212 | 2.73 | 1.37 |
| 2 | 181 | 151 | 62 | 109 | 2.92 | 1.39 |
| 4 | 98 | 88 | 37 | 62 | 2.65 | 1.42 |
| 8 | 56 | 51 | 22 | 39 | 2.55 | 1.31 |

The "Intel vs. GNU" columns give the GNU wall-clock time divided by the Intel wall-clock time for the same precision.

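A short visualisation of the times is given in the following two figures:

[Figure: Scaling of WRF for different compilers and precisions (scaling_wrf_jan.png)]

[Figure: Speedup of WRF for different compilers and precisions. The times are normalized by the time for one core with the same setup. (speedup_wrf_jan.png)]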
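Speedup is the one-core time divided by the n-core time, S(n) = T(1)/T(n), and parallel efficiency is E(n) = S(n)/n. The awk sketch below computes both from the wall-clock times in the scaling table. The function name `speedup_table` is illustrative, and the here-document simply copies the table values in the column order cores, GNU SP, GNU DP, Intel SP, Intel DP.

```bash
#!/bin/bash
# Speedup S(n) = T(1)/T(n) and parallel efficiency E(n) = S(n)/n.
# Input lines: number of cores, then one wall-clock time [s] per setup;
# the first line must be the one-core run (reference times).
speedup_table() {
  awk '
    NR == 1 { for (i = 2; i <= NF; i++) t1[i] = $i }    # one-core reference times
    {
      printf "%d cores:", $1
      for (i = 2; i <= NF; i++) {
        s = t1[i] / $i                                   # speedup
        printf "  S=%.2f E=%.2f", s, s / $1              # speedup, efficiency
      }
      printf "\n"
    }
  '
}

# Values from the table: cores, GNU SP, GNU DP, Intel SP, Intel DP
speedup_table <<'EOF'
1 351 292 127 212
2 181 151 62 109
4 98 88 37 62
8 56 51 22 39
EOF
```

On 8 cores, for example, the Intel single-precision build reaches a speedup of about 5.8, i.e. an efficiency of roughly 72 %.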

Known issues

- The Intel compiler release 12.0.0 introduces a bug into the binary ungrib.exe of the WPS suite. Due to this bug, spurious waves can occur in the preprocessed data.

- With the default build settings, WRF uses single-precision floating-point arithmetic, which leads to strong deviations in the WRF results when the compiler or the WRF release is changed. WRF should therefore be compiled with double-precision floating-point arithmetic.
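Following this recommendation, a job script could warn, or stop, when a single-precision WRF module is loaded. The sketch below is a hypothetical check, not part of the installed modules. It inspects the colon-separated LOADEDMODULES variable maintained by the module system and assumes that the module names follow the pattern of the example job script, ending in SP or DP (e.g. wrf/3.4.1/em_real/DP). Verify the real names with `module avail wrf`.

```bash
#!/bin/bash
# Hypothetical check: warn if a single-precision WRF module is loaded.
# Assumes module names of the form wrf/<version>/<case>/<SP|DP>.
check_wrf_precision() {
  local IFS=':' mod
  # LOADEDMODULES is a colon-separated list of the currently loaded modules;
  # the unquoted expansion splits it on ":" because of the local IFS.
  for mod in ${LOADEDMODULES:-}; do
    case "$mod" in
      wrf/*/SP)
        echo "warning: single-precision WRF module loaded ($mod); results may change with compiler or release" >&2
        return 1
        ;;
    esac
  done
  return 0
}
```

In the job script it could be called right after `module load`, e.g. `check_wrf_precision || exit 1` to abort single-precision runs.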