File system and Data Management


The HPC cluster offers access to two important file systems, namely a parallel file system connected to the communication network and an NFS-mounted file system connected via the campus network. In addition, the compute nodes of the CARL cluster have local storage devices for high I/O demands. The different file systems available are documented below. '''Please follow the best-practice guidelines given below.'''

== Storage Hardware ==

A GPFS Storage Server (GSS) serves as a parallel file system for the HPC cluster. The total (net) capacity of this file system is about 900 TB and the read/write performance is up to 17/12 GB/s over FDR Infiniband. It is possible to mount the GPFS on your local machine using SMB/NFS (via the 10GE campus network). '''The GSS should be used as the primary storage device for HPC, in particular for data that is read/written by the compute nodes'''. The GSS offers no backup functionality (i.e. deleted data cannot be recovered).

The central storage system of the IT services is used to provide the NFS-mounted $HOME directories and other directories, namely $DATA, $GROUP, and $OFFSITE. The central storage system is provided by an IBM Elastic Storage Server (ESS). It offers very high availability, snapshots, and backup, and should be used for long-term storage, in particular for everything that cannot be recovered easily (program codes, initial conditions, final results, ...).

== File Systems ==

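The following file systems are available on the cluster:

<center>
{| style="background-color:#eeeeff;" cellpadding="10" border="1" cellspacing="0"
|- style="background-color:#ddddff;"
! File System
! Environment Variable
! Path
! Device
! Data Transfer
! Backup
! Used for
! Comments
|-
| Home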

| $HOME
| /user/abcd1234
| ESS
| NFS over 10GE
| yes
| critical data that cannot easily be reproduced (program codes, initial conditions, results from data analysis)
| high-availability file-system, snapshot functionality, can be mounted on local workstation
|-
| Data
| $DATA
| /nfs/data/abcd1234
| ESS
| NFS over 10GE
| yes
| important data from simulations for long term (project duration) storage
| access from the compute nodes is slow but possible, can be mounted on local workstation

|-
| Group
| $GROUP
| /nfs/group/<groupname>
| ESS
| NFS over 10GE
| yes
| similar to $DATA but for data shared within a group
| available upon request to [mailto:hpcsupport@uni-oldenburg.de Scientific Computing]
|-
| Work
| $WORK
| /gss/work/abcd1234
| GSS
| FDR Infiniband
| no
| data storage for simulations at runtime, for pre- and post-processing, short term (weeks) storage
| parallel file-system for fast read/write access from compute nodes, temporarily store larger amounts of data
|-
| directory is created at job startup and deleted at completion, job script can copy data to other file systems if needed
|-

| Burst Buffer
| $NVMESHDIR
| /nvmesh/<job-specific-dir>
| NVMe Server
| FDR Infiniband
| no
| temporary data storage during job runtime, very high I/O performance
| directory is created at job startup and deleted at completion, job script can copy data to other file systems if needed, currently available for testing
|-
| Offsite
| $OFFSITE
| /nfs/offsite/user/abcd1234
| ESS
| NFS over 10GE
| yes
| long-term data storage, use for data currently not actively needed (project finished)
| only available on the login nodes and as SMB share, access can be slower in the future
|-
|}
</center>

=== Snapshots on the ESS ===

Snapshots are enabled for some of the directories on the ESS, namely $DATA, $GROUP, and $HOME. You can find these snapshots by changing to the hidden directory ''.snapshots'' with the command

 cd .snapshots

In this directory you will find folders with names like '''ess-data-2017-XX-XX''' or, as in the example below, '''@GMT-2019.05.16-21.00.00-hpc_user-daily'''. These folders contain a snapshot of every $DATA and $HOME directory of every user.

''The following applies to $HOME:''

The interval between snapshots depends on their age.

Snapshots of the current day are taken hourly, so you can quickly correct recent errors.

The hourly snapshots are deleted the following day, so the hourly interval only applies to the current day. However, one snapshot per day is kept for one month, so files can still be recovered after a longer period of time.

For $DATA a simpler scheme applies: a snapshot is created once per day and kept for 30 days.

After one month, the oldest snapshots are deleted. Snapshots are therefore not a long-term backup solution.

==== Example ====

''On the 17th of May, our IT assistant tried to optimize a script but ended up making it unusable. Unfortunately, he had not made a backup before saving his 'progress'. Now he could either try to fix the code, or he could make use of the snapshot feature of our storage system. Wisely, he chose the latter and restored a copy from the 16th of May.''

<pre>
[erle1100@hpcl004 JobScriptExamples]$ cd .snapshots
[erle1100@hpcl004 .snapshots]$ ll
total 43
[... shortened for the sake of clarity ...]
drwxr-xr-x 6 erle1100 hrz 4096 May 9 14:10 @GMT-2019.05.15-21.00.00-hpc_user-daily
drwxr-xr-x 6 erle1100 hrz 4096 May 9 14:10 @GMT-2019.05.16-21.00.00-hpc_user-daily
drwxr-xr-x 6 erle1100 hrz 4096 May 9 14:10 @GMT-2019.05.17-08.00.00-hpc_user-hourly
drwxr-xr-x 6 erle1100 hrz 4096 May 9 14:10 @GMT-2019.05.17-09.00.00-hpc_user-hourly
drwxr-xr-x 6 erle1100 hrz 4096 May 9 14:10 @GMT-2019.05.17-10.00.00-hpc_user-hourly
[erle1100@hpcl004 .snapshots]$ cd @GMT-2019.05.16-21.00.00-hpc_user-daily/
[erle1100@hpcl004 @GMT-2019.05.16-21.00.00-hpc_user-daily]$ ll
total 4
drwxr-xr-x 4 erle1100 hrz 4096 May 2 04:58 CHEM
drwxr-xr-x 2 erle1100 hrz 4096 May 2 04:58 Perl
drwxr-xr-x 3 erle1100 hrz 4096 Apr 24 14:47 PHYS
drwxr-xr-x 2 erle1100 hrz 4096 May 9 14:37 SLURM
[erle1100@hpcl004 @GMT-2019.05.16-21.00.00-hpc_user-daily]$ cp CHEM/useful_script.sh ../../../CHEM/ && cd ../../../CHEM/
</pre>
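If you are not sure which snapshot still holds an intact copy of a file, you can search all snapshot folders at once instead of browsing them one by one. The following bash function is a minimal sketch and not a tool installed on the cluster. The name <tt>snapshot_versions</tt> is hypothetical, and the function assumes that the snapshot folder names begin with a fixed-width timestamp, as in the listing above.

```shell
# snapshot_versions SNAPDIR RELPATH
# Hypothetical helper (not installed on the cluster): print every copy of
# RELPATH that exists in one of the snapshot folders inside SNAPDIR.
# Folder names start with a fixed-width timestamp
# (e.g. @GMT-2019.05.16-21.00.00-hpc_user-daily), so a byte-wise sort
# lists the copies from oldest to newest.
snapshot_versions() {
    local snapdir=$1 relpath=$2 snap
    for snap in "$snapdir"/*/; do
        # "$snap" ends with "/"; print the path only if the file exists there
        if [[ -e "$snap$relpath" ]]; then
            echo "$snap$relpath"
        fi
    done | LC_ALL=C sort
}
```

In the example above, running <tt>snapshot_versions . CHEM/useful_script.sh</tt> inside the <tt>.snapshots</tt> directory would list all snapshot copies of the script, oldest first. You can then copy the one you need back with <tt>cp</tt>.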

== Quotas ==

=== Default Quotas ===

Quotas are used to limit the storage capacity and also the number of files for each user on each file system (except <tt>$TMPDIR</tt>, which is not persistent after job completion). The following table gives an overview of the default quotas. Users with a particularly high demand for storage can contact Scientific Computing to have their quotas increased (within reason and depending on the available space).

<center>
{| style="background-color:#eeeeff;" cellpadding="10" border="1" cellspacing="0"
|- style="background-color:#ddddff;"
! rowspan="2" | File System
! colspan="2" | File size
! colspan="2" | Number of Files
! rowspan="2" | Grace Period
|- style="background-color:#ddddff;"
! Soft Quota
! Hard Quota
! Soft Quota
! Hard Quota

|-
| $HOME
| 1 TB
| 10 TB
| 500k
| 1M
| 30 days
|-
| $DATA
| 20 TB
| 25 TB
| 1M
| 1.5M
| 30 days
|-
| $WORK
| 25 TB
| 50 TB
| none
| none
| 30 days
|-
| $OFFSITE
| 12.5 TB
| 15 TB
| 250k
| 300k
| 30 days
|-
|}
</center>

A hard limit means that you cannot write more data to the file system than your personal quota allows. Once the hard limit has been reached, any write operation will fail (including those from running simulations).

A soft limit, on the other hand, only triggers a grace period during which you can still write data to the file system (up to the hard limit). Once the grace period is over, you can no longer write data, again including from running simulations. Note that this applies even if you are still below the hard limit, as long as you are above the soft limit.

That means you can store data for as long as you want while you are below the soft limit. When you exceed the soft limit (e.g. during a long, high-I/O simulation on $WORK), the grace period starts. You can still produce more data within the grace period and below the hard limit. Once you delete enough of your data on $WORK to get below the soft limit, the grace period is reset. This system forces you to regularly clean up data that is no longer needed and helps to keep the GPFS storage system usable for everyone.
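The interplay of soft limit, hard limit and grace period can be summarized as a small decision rule. The following bash function is only a simplified model of the rules described above, not how GPFS implements them. The function name <tt>quota_state</tt> and its integer arguments are assumptions made for illustration.

```shell
# quota_state USAGE SOFT HARD GRACE_DAYS_LEFT
# Simplified model of the quota rules described above (a sketch, not the
# actual GPFS logic). USAGE, SOFT and HARD must be integers in the same unit
# (e.g. GB); GRACE_DAYS_LEFT is the remaining grace period in days
# (0 = expired). Prints "ok", "grace" or "blocked".
quota_state() {
    local usage=$1 soft=$2 hard=$3 grace_left=$4
    if (( usage >= hard )); then
        echo blocked    # hard limit reached: every write fails
    elif (( usage <= soft )); then
        echo ok         # at or below the soft limit: no time limit
    elif (( grace_left > 0 )); then
        echo grace      # above the soft limit: writes allowed until the grace period ends
    else
        echo blocked    # grace period expired while above the soft limit
    fi
}
```

For example, <tt>quota_state 1170 1000 10000 16</tt> (usage and limits in GB) prints <tt>grace</tt>: the soft limit is exceeded, but writing is still possible for the remaining 16 days.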

=== Checking your File System Usage ===

Once a week (on Mondays at 2am), you will receive an e-mail with your current Quota Report, which lists the current usage as well as the quotas for the different directories. Alternatively, you can always run the command

 $ lastquota

on the cluster. Note that this report is only updated once a day (except for <tt>$WORK</tt>, which is updated once per hour). The report may look like this:

<pre>
------------------------------------------------------------------------------
  GPFS Quota Report for User: abcd1234
  Time of last quota update: Apr 16 03:07 (WORK: Apr 16 17:00 )
------------------------------------------------------------------------------
  Filesystem      file size        soft         max       grace
                  file count
------------------------------------------------------------------------------
  $HOME             1.17 TB      1.0 TB     10.0 TB     16 days
                     0.437M        500k          1M        none
  $DATA             0.00 TB     20.0 TB     25.0 TB        none
                          1          1M        1.5M        none
  $OFFSITE          1.21 TB     12.5 TB     15.0 TB        none
                     0.270M        250k        300k     27 days
  $GROUP               0.12           -           -           -
  $WORK                37.1        25.0        50.0     expired
------------------------------------------------------------------------------
</pre>

In this case, the user has several problems:

* In <tt>$HOME</tt>, the current usage of 1.17 TB is above the file size limit of 1 TB (soft limit), and 16 days of the grace period remain before no more files can be written to <tt>$HOME</tt>. The number of files (0.437M) is still below the soft limit of 500k, although not by much.
* In <tt>$OFFSITE</tt>, the number of files (0.270M) is above the soft limit of 250k files, and 27 days of the grace period remain.
* In <tt>$WORK</tt>, the current usage (37.1 TB) is again above the soft limit (25 TB), and here the grace period has already expired. To be able to write to <tt>$WORK</tt> again, the user has to move some of the files from <tt>$WORK</tt> to, e.g., <tt>$DATA</tt>.
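With many directories, it can help to filter the report for entries whose grace period is running or has already expired. The following bash/awk function is a sketch. It relies on the report layout shown above: a size line starting with the file system name, optionally followed by a file-count line starting with a number. The name <tt>quota_warnings</tt> is hypothetical, and the function may need adjusting if the output format of <tt>lastquota</tt> changes.

```shell
# quota_warnings: read a lastquota report on stdin and print every quota whose
# grace column is neither "none" nor "-", i.e. an exceeded soft limit.
# Hypothetical helper that depends on the report layout shown above.
quota_warnings() {
    awk '
        $1 ~ /^\$/            { fs = $1; kind = "size" }   # size line, e.g. "$HOME ..."
        $1 ~ /^[0-9.]+[kM]?$/ { kind = "count" }           # file-count line below it
        $1 !~ /^\$/ && $1 !~ /^[0-9.]+[kM]?$/ { next }     # headers and separators
        {
            grace = $NF
            if (grace == "days") grace = $(NF-1) " days"   # e.g. "16 days"
            if (grace != "none" && grace != "-") print fs, kind ": " grace
        }
    '
}
```

Running <tt>lastquota | quota_warnings</tt> on the sample report above would print one line each for the size of <tt>$HOME</tt> (16 days), the file count of <tt>$OFFSITE</tt> (27 days) and the size of <tt>$WORK</tt> (expired).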

=== Reducing File Sizes and Number of Files ===

Typically, during a project, you will run several jobs on the HPC cluster that generate output files in <tt>$WORK</tt>. When (a part of) the project is finished, you should move the data files that you need to keep to <tt>$DATA</tt> (if you are still working on the data analysis) or to <tt>$OFFSITE</tt> (if the data can be archived). This prevents you from hitting the quota limit on <tt>$WORK</tt>, but you might then reach the limits of the other directories.

In that case, it is recommended to use (compressed) <tt>tar</tt>-files in order to reduce the number of files and also their size. The <tt>tar</tt>-command can be used to combine many (small) files into a single archive:

 $ tar cvf project_xyz.tar project_xyz/

The above command will create the file <tt>project_xyz.tar</tt>, which contains all the files from the directory <tt>project_xyz/</tt> and its subdirectories (the directory structure is preserved). Once the <tt>tar</tt>-file has been created, the original directory can be deleted. If you want to unpack the <tt>tar</tt>-file, you can use the command

 $ tar xvf project_xyz.tar

which will unpack all the files into the directory <tt>project_xyz</tt>. It is also possible to extract only specific files by appending their paths within the archive to the command (e.g. <tt>tar xvf project_xyz.tar project_xyz/some_file</tt>). Using <tt>tar</tt> greatly reduces the number of files to store (which may also reduce the total storage usage).

The file size can be reduced by compressing the file, which can be done with programs like <tt>gzip</tt> or <tt>bzip2</tt>. We recommend using [https://github.com/facebook/zstd <tt>zstd</tt>], as it is fast and supports multi-threading. The <tt>tar</tt>-file from above (or any other file) can be compressed with

 $ zstd -T0 --rm project_xyz.tar

which will create the compressed file <tt>project_xyz.tar.zst</tt> and remove the original one afterwards (to keep it, omit the <tt>--rm</tt> option). To decompress the file again, use the command

 $ zstd -d --rm project_xyz.tar.zst

to obtain the original <tt>tar</tt>-file. It is also possible to combine <tt>tar</tt> and <tt>zstd</tt> in a single command with

 $ tar cf - project_xyz/ | zstd -T0 > project_xyz.tar.zst

Whether you need to create a <tt>tar</tt>-file depends on how many files you have to store, but in general a <tt>tar</tt>-file can be handled more easily (and requires fewer resources from the file system). Compression is always useful, but the compression ratio may be small depending on the type of data.
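To decide whether packing a directory into a <tt>tar</tt>-file is worthwhile, it helps to know how many files it contains and how many of them are small. The following bash function is a sketch. Its name and the 1 MiB threshold are illustrative choices, not a site rule.

```shell
# count_files DIR
# Hypothetical helper: print the number of regular files below DIR and how
# many of them are smaller than 1 MiB.
count_files() {
    local dir=$1 total small
    total=$(find "$dir" -type f | wc -l)
    # -size -1048576c matches files strictly smaller than 1048576 bytes.
    # ("-size -1M" would only match empty files, because find rounds sizes
    # up to whole MiB before comparing.)
    small=$(find "$dir" -type f -size -1048576c | wc -l)
    echo "$total files, $small smaller than 1 MiB"
}
```

For example, <tt>count_files project_xyz</tt> prints the total number of files and the number of small files in one line. If most of them are small, creating a <tt>tar</tt>-file as described above is usually a good idea.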

== I/O-intensive Applications ==

The <tt>$WORK</tt>-directory on the parallel file system (GPFS) is the primary file system for I/O from the compute nodes. However, the GPFS cannot handle certain I/O patterns (random I/O, many small read or write operations) very well. In these cases, other I/O devices should be used, both to get better performance for yourself and to avoid affecting other users. For this purpose, the compute nodes in CARL are equipped with local disks or local NVMes, which can be requested as a generic resource. In addition, a server equipped with 4 NVMes can be used as a burst buffer.

=== Local Scratch Disks / TMPDIR ===

In addition to the storage in <tt>$WORK</tt>, the compute nodes in CARL also have local storage space called <tt>$TMPDIR</tt>. This local storage device can only be accessed during the run time of a job. When the job is started, a job-specific directory is created, which can be accessed through the environment variable <tt>$TMPDIR</tt>. The amount of space differs between node types:

* '''mpcs-nodes''': approx. 800 GB (HDD)
* '''mpcl-nodes''': approx. 800 GB (HDD)
* '''mpcp-nodes''': approx. 1.7 TB (SSD)
* '''mpcb-nodes''': approx. 1.7 TB (SSD)
* '''mpcg-nodes''': approx. 800 GB (HDD)

'''Important note''': This storage space is only meant for files that are temporarily created during the execution of the job. After the job has finished, these files will be deleted. If you need these files, you have to add a copy command to your job script, e.g.

 cp $TMPDIR/54321_abcd1234/important_file.txt $DATA/54321_abcd1234/

If you need local storage for your job, please add the following line to your job script:

 #SBATCH --gres=tmpdir:100G

This will reserve 100 GB of local, temporary storage for your job.

Keep in mind that you can use only one <tt>--gres</tt> ('''g'''eneric '''res'''ource) line per job script; multiple <tt>--gres</tt> lines will not be accepted. If you also need a GPU for your job, combine both resources in a single line:

 #SBATCH --gres=tmpdir:100G,gpu:1
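Because <tt>$TMPDIR</tt> is deleted when the job ends, a job script typically stages its input there, runs the I/O-intensive step, and copies the results out again. The following bash function is a minimal sketch of that pattern. The name <tt>run_in_tmpdir</tt>, the subdirectory <tt>run</tt> and the example paths are hypothetical. The function copies the whole working directory back, which is simple but may copy more data than you need.

```shell
#!/bin/bash
#SBATCH --gres=tmpdir:100G   # request local scratch space as described above

# run_in_tmpdir INPUT_DIR RESULT_DIR COMMAND [ARGS...]
# Hypothetical helper: copy INPUT_DIR to the job's $TMPDIR, run COMMAND
# there and copy the working directory back to RESULT_DIR, because
# $TMPDIR is deleted when the job ends.
run_in_tmpdir() {
    local input_dir=$1 result_dir=$2 workdir status
    shift 2
    if [[ -z $TMPDIR ]]; then
        echo "TMPDIR is not set" >&2
        return 1
    fi
    workdir="$TMPDIR/run"
    mkdir -p "$workdir" "$result_dir" || return 1
    cp -r "$input_dir"/. "$workdir"/ || return 1   # stage input on the local disk
    ( cd "$workdir" && "$@" )                        # run the I/O-intensive step locally
    status=$?
    cp -r "$workdir"/. "$result_dir"/ || return 1   # copy results back, even if COMMAND failed
    return "$status"
}

# Example call in a job script (my_app and the paths are placeholders):
# run_in_tmpdir "$WORK/input" "$WORK/results_$SLURM_JOB_ID" ./my_app
```

Note that the final copy is skipped if the job is killed, e.g. when it reaches its time limit, so leave enough margin in the requested run time.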

=== Burst Buffer ===

In preparation for the HPC cluster that will follow CARL, we have added a server with four NVMes to the cluster, which provides a so-called burst buffer. The internal I/O bandwidth of this server is about 12 GB/s, and it is connected with two FDR Infiniband links, which can also provide a throughput of 12 GB/s. For a single compute node, the bandwidth is limited to 6 GB/s (single FDR link). The total capacity of the system is 5900 GB, and a volume of the required size can be requested in a job.

At the moment, this server can only be accessed from a few compute nodes (mainly <tt>mpcb008</tt> and <tt>mpcb017</tt>) and only upon request to {{sc}}. The following (incomplete) job script shows how this burst buffer can be used:

<pre>
#!/bin/bash
#SBATCH -p carl.p
#SBATCH ...
#SBATCH --license nvmesh_gb:500   # size of the burst buffer in GB
...
# change to the burst buffer and run the I/O-intensive application
cd $NVMESHDIR
ioapp        # this assumes I/O is done in the working directory
# to keep the final result, copy it to $WORK
cp result.dat $WORK/
</pre>

Using the burst buffer is quite similar to using <tt>$TMPDIR</tt>:

# You need to request a volume of the required size on the burst buffer. This is done in the form of a license request, where one <tt>nvmesh_gb</tt> is equivalent to 1 GB. The total number of licenses (GB) is 5900, so jobs can only start if enough licenses (space on the device) are available.
# In your job script, you need to make sure that I/O is done in <tt>$NVMESHDIR</tt>. This depends on your application, but it might involve <tt>cd</tt>-ing into the directory and/or copying initial data to it.
# When the job is completed, the directory <tt>$NVMESHDIR</tt> will be deleted automatically and permanently, so any files you need to keep have to be copied to <tt>$WORK</tt> or some other place (<tt>$WORK</tt> is probably the fastest option at that point).

== rsync: File transfer between different user accounts ==

Sometimes you may want to transfer files from one account to another. This often applies to users who had to change their account, for example because they switched from their student account to their new employee account.

In this case, you may want to use ''rsync'' while logged in to your old account:

 rsync -avz $HOME/source_directory abcd1234@carl.hpc.uni-oldenburg.de:/user/abcd1234/target_directory

where ''abcd1234'' is the new account.

You will have to enter the password of the target account to proceed with the command.

* '''-a''' required (archive mode). Copies directories recursively and preserves access rights, timestamps and symbolic links. The copied files will belong to the new account.
* '''-v''' optional. You will see every action for every file that rsync copies. This can flood your whole terminal session, so you may want to redirect the output into a file if you want to keep track of every copied file afterwards:
** rsync -avz $HOME/source_directory abcd1234@carl.hpc.uni-oldenburg.de:/user/abcd1234/target_directory > copy-log.txt 2>&1
* '''-z''' optional. Compresses the data during the transfer, which reduces the amount of data sent over the network.

== Managing access rights of your folders ==

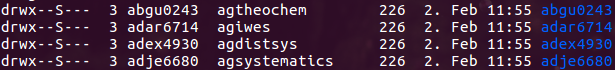

<span style="background:yellow">drwx--S---</span>
*the first letter marks the file type; in this case it is a "'''d'''", which means we are looking at a '''directory'''.
*the following characters are the access rights: "'''rwx'''--S---" means: only the owner can '''r'''ead (list), '''w'''rite (create or delete entries in) and e'''x'''ecute (enter) the directory.
*The "'''S'''" stands for "set group ID" (setgid). On a directory, it causes new files and subdirectories to inherit the group of the directory; the capital "S" indicates that the group itself has no execute permission. '''This was a temporary option to ensure safety while we were opening the cluster to the public. It is possible that the "S" is not set when you are looking at this guide; that is okay and intended.'''

<span style="background:green">agtheochem</span>
*current group of the file/directory
*this is your primary group. You can check your secondary groups with the command "groups $(whoami)". It will output something like this: "abcd1234: group1 group2".

<span style="background:pink">abgu0243</span>

Basically, we will need three commands to modify our access rights on the cluster:
*chmod
*chgrp
*chown

You will most likely need only one or two of these commands; nevertheless, we have listed and described all of them for the sake of completeness.

=== chmod ===

The command ''chmod'' directly modifies the access rights of your desired file/directory. ''chmod'' has two different modes, symbolic mode and octal mode, but their syntax is pretty much the same:

 chmod [OPTION]... MODE[,MODE]... FILE...
 chmod [OPTION]... OCTAL-MODE FILE...

The following table shows the three different user categories for each of the modes.

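{| class="wikitable"
! User category
! Symbolic mode
! Octal mode
|-
| owner (user)
| u
| first digit
|-
| group
| g
| second digit
|-
| others
| o
| third digit
|}

Possible access rights are: '''r''' = read, '''w''' = write, '''x''' = execute. In octal mode, each digit is the sum of the rights granted to that category: '''r''' = 4, '''w''' = 2, '''x''' = 1 (e.g. 7 = rwx, 6 = rw-, 4 = r--).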

==== Examples for the symbolic mode ====
*allow your group to read, but not to write or execute your file
 chmod g=r yourfile.txt
*allow other users to read and write, but not to execute your file
 chmod o=rw yourfile.txt or chmod o+rw yourfile.txt
Note that <tt>o+rw</tt> keeps an execute permission that was already set, whereas <tt>o=rw</tt> removes it.

==== Examples for the octal mode ====
*allow your group to read, but not to write or execute your file (the owner can still read, write and execute; other users can read as well)
 chmod 744 yourfile.txt
*allow other users to read and write, but not to execute your file

An easy way to calculate these numbers is the following tool: [http://chmod-calculator.com/ chmod-calculator.com]
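The octal digits are simply the sums of the values r = 4, w = 2 and x = 1 for owner, group and others. The following bash function is a small sketch that performs this calculation for a nine-character permission string as printed by <tt>ls -l</tt> (without the leading file-type character). The function name is hypothetical, and special bits such as setgid (s/S) are not handled.

```shell
# perm_to_octal PERMS
# Hypothetical helper: convert a permission string like "rwxr--r--" into
# its octal form by adding r=4, w=2, x=1 for owner, group and others.
perm_to_octal() {
    local perms=$1 result="" i digit
    for i in 0 3 6; do                       # owner, group, others
        digit=0
        [[ ${perms:i:1} == r ]] && digit=$(( digit + 4 ))
        [[ ${perms:i+1:1} == w ]] && digit=$(( digit + 2 ))
        [[ ${perms:i+2:1} == x ]] && digit=$(( digit + 1 ))
        result+=$digit
    done
    echo "$result"
}
```

For example, <tt>perm_to_octal rwxr--r--</tt> prints <tt>744</tt>, the value used in the example above.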

=== chgrp ===

The command ''chgrp'' ('''ch'''ange '''gr'''ou'''p''') changes the group of your file/directory. The syntax of the command looks like this:

 chgrp [OPTION]... GROUP FILE...

'''Example'''

Change the group of your file/directory:

 chgrp yourgrp /user/abcd1234/randomdirectory

If you are reading this part of the wiki, you might be looking for a way to change the group of your files after your Unix group was changed. A short example of how to do that can be found [https://wiki.hpcuser.uni-oldenburg.de/index.php?title=Quickstart_Guide#Account_creation here.]

=== chown ===

 chown [OPTION]... [OWNER][:[GROUP]] FILE...

'''Note:''' In most cases you will not need this command, since only administrators can change the owner of a file.

=== Examples on granting and restricting access to your files ===

* How can I grant access to a specific folder?

Access rights must be set on every directory along the path, starting at your $HOME directory (/user/abcd1234). For example, suppose that the subfolder folder2 should be shared. The following command alone would not yet grant access:

 chmod o+r $HOME/folder1/folder2 # negative example

Other users will still be denied access because they have no permissions on the preceding folders ''$HOME'' and ''folder1''.

This means that every folder that has to be passed through needs execute permission (+x). Only then do the read (+r), write (+w) or execute (+x) permissions on the last folder in the chain take effect.

So to get read access to folder2, the following commands must be executed:

 chmod o+x $HOME $HOME/folder1
 chmod o+rx $HOME/folder1/folder2
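To find out which directory in a path still lacks the execute permission, you can walk up the path and inspect each directory. The following bash function is a sketch that assumes GNU coreutils <tt>stat</tt> and absolute paths. The name <tt>missing_x</tt> is hypothetical.

```shell
# missing_x DIR STOP CLASS
# Hypothetical helper: print every directory from DIR up to (and including)
# STOP that lacks execute permission for CLASS (g = group, o = others).
missing_x() {
    local dir=$1 stop=$2 class=$3 pos perms
    case $class in
        g) pos=6 ;;    # group execute position in e.g. "drwxr-x--x"
        o) pos=9 ;;    # others execute position
        *) echo "class must be g or o" >&2; return 2 ;;
    esac
    while :; do
        perms=$(stat -c %A "$dir") || return 1
        # x, s (setgid + x) and t (sticky + x) all include execute permission
        case ${perms:pos:1} in
            x|s|t) ;;
            *) echo "$dir" ;;
        esac
        [[ $dir == "$stop" || $dir == / ]] && break
        dir=$(dirname "$dir")
    done
}
```

For example, <tt>missing_x $HOME/folder1/folder2 $HOME o</tt> prints every directory between folder2 and $HOME that other users cannot pass through. No output means the path is open for them.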

*How can I grant access to a file/folder for another member of my primary group?

If you only want to give permission to your group members, just replace ''o+x'' with ''g+x'':

 chmod g+x $HOME
 chmod g+x $HOME/your_folder

:With only +x, members of your group can enter the folder you specified (and open files in it whose names they know), but they cannot list its contents, and your other folders are not visible to them.
:Adding "r" additionally allows them to list the contents of the folder. Please note that the r and x permissions allow the group members to copy the corresponding (readable) files into their own directories.

 chmod g+rx $HOME/your_folder

*How can I grant access to a file/folder for another member of one of my secondary groups?

 chgrp -R your_secondary_group $HOME/your_folder

:This gives the members of "your_secondary_group" the group permissions set on your folder "$HOME/your_folder". Since $HOME itself belongs to your primary group, they additionally need execute permission for others on $HOME (<tt>chmod o+x $HOME</tt>).

*How can I grant access to a file/folder for anyone without anyone being able to access other files/folders stored in the same directory?

 chmod o+x $HOME/your_folder

:As explained above, <tt>$HOME</tt> needs the same permission (<tt>chmod o+x $HOME</tt>).

'''NOTE: We used "+" in every example above. Remember that using "+" (instead of "=", which overwrites rights) keeps all other rights that are applied to the file/folder and only changes the ones you added!'''

*How can I prevent anyone else from reading, modifying, or even seeing files/directories in my directories?

 chmod u=rwx,go= $HOME/your_folder

== Storage Systems Best Practice ==

* If possible, avoid writing many small files to any file system.
* Clean up your data regularly.

== Compressing and Moving Files to $DATA or $OFFSITE ==

The GPFS for $WORK only offers a limited amount of storage space for data files that are actively needed for processing on the HPC cluster. Any data files that are no longer actively used should be moved to <tt>$DATA</tt> or <tt>$OFFSITE</tt> to free space in the <tt>$WORK</tt> directories. The <tt>$OFFSITE</tt> directory should be used if you want to keep data files from a finished project (i.e. if you want to archive the data files). Currently, $OFFSITE is located on the ESS file system, but a different (slower and less expensive) storage system may be used in the future. The default quota for $OFFSITE is 12.5 TB.

The following steps are recommended to move data files to <tt>$OFFSITE</tt> (and likewise to <tt>$DATA</tt>):

# Collect all files/directories in a separate directory, e.g. <tt>project_xyz</tt> (create a new one if needed). Make sure to only collect the data files that you absolutely must keep, and make sure that a description of the data is available, too.
# Consider packing the directory <tt>project_xyz</tt> into a single archive, e.g. using the command <pre>$ tar cvf $OFFSITE/project_xyz.tar project_xyz/ > $OFFSITE/project_xyz.file.lst</pre> in particular if you have many individual files smaller than 1 MB. With this command, the archive is created directly in your $OFFSITE directory and you can skip the <tt>rsync</tt> step below. In addition, a text file <tt>project_xyz.file.lst</tt> listing all the files and directories in the archive is created next to the archive.
# Consider compressing your data files or the archive from the previous step, e.g. with <pre>$ zstd -T0 --rm $OFFSITE/project_xyz.tar</pre> (note that the original tar-file is deleted after successful compression), or alternatively use compression when creating the archive, for example with the command <pre>$ tar -I zstd -cvf $OFFSITE/project_xyz.tar.zst project_xyz/ > $OFFSITE/project_xyz.file.lst</pre> If your data files are already stored in a compressed format, this step should be skipped.
# ''Optional'': Use rsync to copy your (archived and compressed) directory to $OFFSITE <pre>$ rsync -av project_xyz.tar.zst $OFFSITE</pre> rsync is recommended because the same command can simply be run again if the transfer is interrupted. Avoid copying data during the usual office hours.
# Create a README file or similar that briefly explains what can be found in <tt>project_xyz.tar.zst</tt> and why it is kept.
# ''Optional'': After the rsync has completed, you can perform a sanity check using e.g. <pre>$ md5sum project_xyz.tar.zst</pre> on both the original and the copied file(s). The resulting checksums should be identical; if not, the rsync process was most likely aborted and should be restarted. If you created a <tt>tar</tt>-file directly in $OFFSITE, you can check its integrity with the command<pre>$ tar -I zstd -tf project_xyz.tar.zst > /dev/null 2>&1 && echo "no error"</pre>which should print <tt>no error</tt> if everything is OK.
# If you are sure the files have been copied correctly, you can delete the original ones to free space on the file system.
# ''Optional'': Remove the write permissions from all the files and directories in $OFFSITE, using <pre>$ chmod a-w project_xyz.tar.zst</pre> for a single file or <pre>$ chmod -R a-w project_xyz/</pre> for a full directory.
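Step 2 and the integrity check can be combined into a small helper. The following bash function is a sketch that assumes GNU <tt>tar</tt>, whose verbose listing goes to standard output when the archive is written to a file. The name <tt>archive_to</tt> is hypothetical. Before reporting success, the function compares the number of entries in the archive with the number of files and directories found on disk. Compression (step 3) can be applied afterwards.

```shell
# archive_to DIR DEST
# Hypothetical helper: pack DIR into DEST/<name>.tar, write the file list to
# DEST/<name>.file.lst (as in step 2) and check that the archive holds as
# many entries as DIR contains files and directories.
archive_to() {
    local src=${1%/} dest=$2 name tarfile listfile n_disk n_tar
    name=$(basename "$src")
    tarfile="$dest/$name.tar"
    listfile="$dest/$name.file.lst"
    # GNU tar prints the verbose listing on stdout when writing to a file
    tar cvf "$tarfile" "$src"/ > "$listfile" || return 1
    n_disk=$(find "$src" | wc -l)          # DIR itself plus everything below it
    n_tar=$(tar -tf "$tarfile" | wc -l)    # entries recorded in the archive
    if [[ $n_disk -ne $n_tar ]]; then
        echo "mismatch: $n_disk entries on disk, $n_tar in $tarfile" >&2
        return 1
    fi
    echo "$tarfile"
}
```

For example, <tt>archive_to project_xyz $OFFSITE</tt> creates <tt>$OFFSITE/project_xyz.tar</tt> together with its file list and prints the archive path if the check succeeds.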

The main idea of $OFFSITE is that once a file or directory has been copied there, it will not be changed afterwards. Please note that the data in $OFFSITE will be deleted some time after your account has been deactivated.

Latest revision as of 09:07, 4 April 2022

The HPC cluster offers access to two important file systems, namely a parallel file system connected to the communication network and an NFS-mounted file system connected via the campus network. In addition, the compute nodes of the CARL cluster have local storage devices for high I/O demands. The different file systems available are documented below. Please follow the best-practice guidelines given below.

Storage Hardware

A GPFS Storage Server (GSS) serves as a parallel file system for the HPC cluster. The total (net) capacity of this file system is about 900 TB and the read/write performance is up to 17/12 GB/s over FDR Infiniband. It is possible to mount the GPFS on your local machine using SMB/NFS (via the 10GE campus network). The GSS should be used as the primary storage device for HPC, in particular for data that is read/written by the compute nodes. The GSS offers no backup functionality (i.e. deleted data cannot be recovered).

The central storage system of the IT services is used to provide the NFS-mounted $HOME-directories and other directories, namely $DATA, $GROUP, and $OFFSITE. The central storage system is provided by an IBM Elastic Storage Server (ESS). It offers very high availability, snapshots, and backups, and should be used for long-term storage, in particular for everything that cannot be recovered easily (program codes, initial conditions, final results, ...).

File Systems

The following file systems are available on the cluster:

| File System | Environment Variable | Path | Device | Data Transfer | Backup | Used for | Comments |
|---|---|---|---|---|---|---|---|
| Home | $HOME | /user/abcd1234 | ESS | NFS over 10GE | yes | critical data that cannot easily be reproduced (program codes, initial conditions, results from data analysis) | high-availability file system, snapshot functionality, can be mounted on local workstation |
| Data | $DATA | /nfs/data/abcd1234 | ESS | NFS over 10GE | yes | important data from simulations for long-term (project duration) storage | access from the compute nodes is slow but possible, can be mounted on local workstation |
| Group | $GROUP | /nfs/group/<groupname> | ESS | NFS over 10GE | yes | similar to $DATA but for data shared within a group | available upon request to Scientific Computing |
| Work | $WORK | /gss/work/abcd1234 | GSS | FDR Infiniband | no | data storage for simulations at runtime, for pre- and post-processing, short-term (weeks) storage | parallel file system for fast read/write access from compute nodes, temporarily store larger amounts of data |
| Scratch | $TMPDIR | /scratch/<job-specific-dir> | local disk or SSD | local | no | temporary data storage during job runtime | directory is created at job startup and deleted at completion, job script can copy data to other file systems if needed |
| Burst Buffer | $NVMESHDIR | /nvmesh/<job-specific-dir> | NVMe Server | FDR Infiniband | no | temporary data storage during job runtime, very high I/O performance | directory is created at job startup and deleted at completion, job script can copy data to other file systems if needed, currently available for testing |
| Offsite | $OFFSITE | /nfs/offsite/user/abcd1234 | ESS | NFS over 10GE | yes | long-term data storage, use for data currently not actively needed (project finished) | only available on the login nodes and as SMB share, access may become slower in the future |

Snapshots on the ESS

Snapshots are enabled in some of the directories on the ESS, namely $DATA, $GROUP, and $HOME. You can find these snapshots by changing to the directory .snapshots with the command

cd .snapshots

If you list the contents of this directory, you will find folders named like ess-data-2017-XX-XX. Each of these folders contains a snapshot of the $DATA and $HOME directories of every user.

The following applies to $HOME: the snapshot frequency depends on the age of the snapshots. Snapshots of the current day are taken hourly, so you can quickly correct recent errors. However, the hourly snapshots are deleted the following day, so the hourly interval only applies to the current day. To let you recover files even after a longer period of time, one snapshot per day is kept for one month.

For $DATA, a simpler scheme applies: one snapshot is created per day and kept for 30 days.

After one month, the oldest snapshots are deleted. This means that snapshots are not a long-term backup solution.

Example

On the 17th of May, our IT assistant tried to optimize a script but ended up making it unusable. Unfortunately, he had not made a backup before saving his 'progress'. Now he could either try to fix the code or make use of our storage system's snapshot feature. Wisely, he chose the latter and restored a copy from the 16th of May.

[erle1100@hpcl004 JobScriptExamples]$ cd .snapshots
[erle1100@hpcl004 .snapshots]$ ll
total 43
[... shortened for the sake of clarity ...]
drwxr-xr-x 6 erle1100 hrz 4096 May 9 14:10 @GMT-2019.05.15-21.00.00-hpc_user-daily
drwxr-xr-x 6 erle1100 hrz 4096 May 9 14:10 @GMT-2019.05.16-21.00.00-hpc_user-daily
drwxr-xr-x 6 erle1100 hrz 4096 May 9 14:10 @GMT-2019.05.17-08.00.00-hpc_user-hourly
drwxr-xr-x 6 erle1100 hrz 4096 May 9 14:10 @GMT-2019.05.17-09.00.00-hpc_user-hourly
drwxr-xr-x 6 erle1100 hrz 4096 May 9 14:10 @GMT-2019.05.17-10.00.00-hpc_user-hourly
[erle1100@hpcl004 .snapshots]$ cd @GMT-2019.05.16-21.00.00-hpc_user-daily/
[erle1100@hpcl004 @GMT-2019.05.16-21.00.00-hpc_user-daily]$ ll
total 4
drwxr-xr-x 4 erle1100 hrz 4096 May 2 04:58 CHEM
drwxr-xr-x 2 erle1100 hrz 4096 May 2 04:58 Perl
drwxr-xr-x 3 erle1100 hrz 4096 Apr 24 14:47 PHYS
drwxr-xr-x 2 erle1100 hrz 4096 May 9 14:37 SLURM
[erle1100@hpcl004 @GMT-2019.05.16-21.00.00-hpc_user-daily]$ cp CHEM/useful_script.sh ../../../CHEM/ && cd ../../../CHEM/
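If you are not sure which snapshot still contains a working version of a file, a small shell function can list all snapshot copies at once. This is a minimal sketch, assuming that the .snapshots directory is reachable from the directory you pass in (as in the example above); the function name find_snapshot_copies is hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: print every snapshot copy of a file.
# Assumes snapshots are reachable as "<dir>/.snapshots/<snapshot>/".
# Usage: find_snapshot_copies <dir> <path relative to dir>
find_snapshot_copies() {
  local dir=$1 relpath=$2 snap
  # The glob expands in sorted order; for the @GMT-YYYY.MM.DD-... names
  # shown above this corresponds to chronological order.
  for snap in "$dir"/.snapshots/*/; do
    if [ -e "${snap}${relpath}" ]; then
      printf '%s\n' "${snap}${relpath}"
    fi
  done
}
```

For example, find_snapshot_copies "$HOME/JobScriptExamples" CHEM/useful_script.sh (assuming that directory lies in $HOME) lists all snapshot copies of the script, from which you can pick one and restore it with cp.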

Quotas

Default Quotas

Quotas are used to limit the storage capacity and also the number of files for each user on each file system (except $TMPDIR, which is not persistent after job completion). The following table gives an overview of the default quotas. Users with particularly high storage demands can contact Scientific Computing to have their quotas increased (within reason and depending on available space).

| File System | File Size: Soft Quota | File Size: Hard Quota | Number of Files: Soft Quota | Number of Files: Hard Quota | Grace Period |
|---|---|---|---|---|---|
| $HOME | 1 TB | 10 TB | 500k | 1M | 30 days |
| $DATA | 20 TB | 25 TB | 1M | 1.5M | 30 days |
| $WORK | 25 TB | 50 TB | none | none | 30 days |
| $OFFSITE | 12.5 TB | 15 TB | 250k | 300k | 30 days |

A hard limit means that you cannot write more data to the file system than your personal quota allows. Once the hard limit has been reached, any write operation will fail (including those from running simulations).

A soft limit, on the other hand, only triggers a grace period during which you can still write data to the file system (up to the hard limit). Once the grace period is over, you can no longer write data, again including from running simulations (note that this applies even if you are below the hard limit but still above the soft limit).

That means you can store data for as long as you want while you are below the soft limit. When you exceed the soft limit (e.g. during a long, I/O-intensive simulation on $WORK), the grace period starts. You can still produce more data within the grace period and below the hard limit. Once you delete enough of your data on $WORK to get below the soft limit, the grace period is reset. This system forces you to regularly clean up data that is no longer needed and helps to keep the GPFS storage system usable for everyone.
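The interplay of soft limit, hard limit, and grace period can be summarized as a small decision function. This is only a simplified model of the rules described above, not the actual GPFS logic; the function name can_write and the use of whole numbers in a common unit (here GB) are assumptions made for illustration.

```shell
#!/bin/bash
# Simplified model of the quota rules (illustration only, not GPFS code).
# Arguments: usage, soft limit, hard limit (integers in the same unit)
#            and whether the grace period has expired (yes/no).
can_write() {
  local usage=$1 soft=$2 hard=$3 grace_expired=$4
  if [ "$usage" -ge "$hard" ]; then
    echo "no (hard limit reached)"
  elif [ "$usage" -lt "$soft" ]; then
    echo "yes (below soft limit)"
  elif [ "$grace_expired" = yes ]; then
    echo "no (grace period expired)"
  else
    echo "yes (grace period running)"
  fi
}
```

With the numbers from the example quota report in the next section (converted to GB), can_write 1170 1000 10000 no prints "yes (grace period running)" for $HOME, while can_write 37100 25000 50000 yes prints "no (grace period expired)" for $WORK.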

Checking your File System Usage

Once a week (on Mondays at 2am), you will receive an e-mail with your current Quota Report, which lists the current usage as well as the quotas for the different directories. Alternatively, you can always run the command

$ lastquota

on the cluster. Note that this report is only updated once a day (except for $WORK, which is updated once per hour). The report could look like this:

------------------------------------------------------------------------------
GPFS Quota Report for User: abcd1234
Time of last quota update: Apr 16 03:07 (WORK: Apr 16 17:00 )
------------------------------------------------------------------------------
Filesystem    file size     soft       max        grace
              file count
------------------------------------------------------------------------------
$HOME         1.17 TB       1.0 TB     10.0 TB    16 days
              0.437M        500k       1M         none
$DATA         0.00 TB       20.0 TB    25.0 TB    none
              1             1M         1.5M       none
$OFFSITE      1.21 TB       12.5 TB    15.0 TB    none
              0.270M        250k       300k       27 days
$GROUP        0.12          -          -          -
$WORK         37.1          25.0       50.0       expired
------------------------------------------------------------------------------

In this case, the user has several problems:

- In $HOME, the current usage of 1.17 TB is above the file size soft limit of 1 TB, and 16 days of the grace period remain before no more files can be written to $HOME. The number of files is OK, although not far below the soft limit either.

- In $OFFSITE, the number of files (0.270M) is above the soft limit of 250k files, and 27 days of the grace period remain.

- In $WORK, the current usage is again too high, and here the grace period has already expired. To be able to use $WORK again, the user has to move at least 12.1 TB of files (37.1 TB minus the 25 TB soft limit) from $WORK to e.g. $DATA.
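To decide which data to move, it helps to know which of your directories take up the most space. The function below is a minimal sketch using standard GNU du and sort options; the name largest_dirs is hypothetical, and note that du has to walk the whole directory tree, which can take a while on a large $WORK directory.

```shell
#!/bin/bash
# Hypothetical helper: list the N largest subdirectories of DIR
# (default: $WORK) to decide what to move to $DATA or $OFFSITE.
# Requires GNU du/sort (-h); hidden directories (.name) are not included.
largest_dirs() {
  local dir=${1:-${WORK:-}} n=${2:-10}
  if [ -z "$dir" ]; then
    echo "usage: largest_dirs [DIR] [N]  (DIR defaults to \$WORK)" >&2
    return 1
  fi
  # -s: one total per subdirectory, -h: human-readable sizes
  du -sh "$dir"/*/ 2>/dev/null | sort -hr | head -n "$n"
}
```

For example, largest_dirs "$WORK" 5 prints the five largest subdirectories of $WORK with their sizes, largest first.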

Reducing File Sizes and Number of Files

Typically, during a project, you will run several jobs on the HPC cluster that generate output files in $WORK. When (a part of) the project is finished, you should move the data files that you need to keep to $DATA (if you are still working on the data analysis) or to $OFFSITE (if the data can be archived). This prevents you from hitting the quota limit on $WORK, but you might then reach the limits on the other directories.

In that case, it is recommended to use (compressed) tar-files in order to reduce the number of files and also the size. The tar-command can be used to combine many (small) files into a single archive:

$ tar cvf project_xyz.tar project_xyz/

The above command will create the file project_xyz.tar which contains all the files from the directory project_xyz/ and its subdirectories (the directory structure is also kept). Once the tar-file has been created, the original directory can be deleted. If you want to unpack the tar-file you can use the command

$ tar xvf project_xyz.tar

which will unpack all the files into the directory project_xyz. It is also possible to extract only specific files. The tar-command allows you to greatly reduce the number of files to store (which may also reduce the total usage of the storage).
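To extract only specific files, you need the member path exactly as it is stored in the archive, which you can look up by listing the archive first. The two functions below are a minimal sketch using standard tar options (t to list, x to extract, -C to choose the target directory); the function names and the member path project_xyz/run1/output.dat are hypothetical.

```shell
#!/bin/bash
# list_archive ARCHIVE: print the stored member paths without unpacking
list_archive() {
  tar tf "$1"
}

# extract_member ARCHIVE MEMBER [DEST]: unpack only MEMBER into DEST (default: .)
# MEMBER must be written exactly as shown by list_archive,
# e.g. project_xyz/run1/output.dat (hypothetical path)
extract_member() {
  local archive=$1 member=$2 dest=${3:-.}
  tar xf "$archive" -C "$dest" "$member"
}
```

For example, list_archive project_xyz.tar | grep output.dat finds the member path, and extract_member project_xyz.tar project_xyz/run1/output.dat recreates only that file (including its directory structure) in the current directory.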

The file size can be reduced by compressing the file with programs like gzip or bzip2. We recommend using zstd, as it is fast and supports multithreading. The tar-file from above (or any other file) can be compressed with

$ zstd -T0 --rm project_xyz.tar

which will create the compressed file project_xyz.tar.zst and remove the original one afterwards (to keep it, omit the --rm option). To decompress the file again, use the command

$ zstd -d --rm project_xyz.tar.zst

to obtain the original tar-file. It is also possible to combine tar and zstd in a single command with

$ tar cf - project_xyz/ | zstd -T0 > project_xyz.tar.zst

Whether you need to create a tar-file depends on how many files you have to store, but in general a tar-file is easier to handle (and requires fewer resources from the file system). Compression is always useful, but the compression ratio may be small depending on the type of data.
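The single-command form also works in the opposite direction: zstd -dc writes the decompressed data to standard output, so a .tar.zst file can be listed or unpacked without first creating the uncompressed tar-file on disk. A minimal sketch, with hypothetical function names:

```shell
#!/bin/bash
# Work with project_xyz.tar.zst without an intermediate .tar file.
# zstd -dc decompresses to stdout; "tar ... -" reads the archive from stdin.
list_zst() {
  zstd -dc "$1" | tar tf -
}

unpack_zst() {
  zstd -dc "$1" | tar xf -
}
```

For example, unpack_zst project_xyz.tar.zst unpacks the archive into the current directory while leaving the compressed file in place.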

I/O-intensive Applications

The $WORK-directory on the parallel file system (GPFS) is the primary file system for I/O from the compute nodes. However, the GPFS cannot handle certain I/O demands (random I/O, many small read or write operations) very well, in which case other I/O devices should be used (to get better performance for yourself and also to not affect other users). For this purpose, the compute nodes in CARL are equipped with local disks or local NVMes, which can be requested as a generic resource. In addition, a server equipped with 4 NVMes can be used as a burst buffer.

Local Scratch Disks / TMPDIR

In addition to the storage in $WORK, the compute nodes in CARL also have local space called $TMPDIR. This local storage device can only be accessed during the run time of a job. When the job is started, a job-specific directory is created, which can be used through the environment variable $TMPDIR. The amount of space differs between node types:

- mpcs-nodes: approx. 800 GB (HDD)

- mpcl-nodes: approx. 800 GB (HDD)

- mpcp-nodes: approx. 1.7 TB (SSD)

- mpcb-nodes: approx. 1.7 TB (SSD)

- mpcg-nodes: approx. 800 GB (HDD)

Important note: This storage space is only used for files created temporarily during the execution of the job. After the job has finished, these files will be deleted. If you need these files, you have to add a copy command to your job script, e.g.

cp $TMPDIR/54321_abcd1234/important_file.txt $DATA/54321_abcd1234/

If you need local storage for your job, please add the following line to your job script:

#SBATCH --gres=tmpdir:100G

This will reserve 100 GB of local, temporary storage for your job.

Keep in mind that you can add only one gres (generic resource) specification per job script; multiple --gres lines will not be accepted. If you also need a GPU for your job, the --gres option should look like this:

#SBATCH --gres=tmpdir:100G,gpu:1
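Putting these pieces together, a job script that stages its data through $TMPDIR could look roughly like the sketch below. The program my_app, the files input.dat and result.dat, the results directory name, and the time limit are hypothetical placeholders, and other #SBATCH options (partition, memory, ...) are omitted. The trap copies the result back even if my_app exits with an error; the final guard only lets the script do its work when it runs inside a Slurm job.

```shell
#!/bin/bash
#SBATCH --ntasks=1
#SBATCH --time=01:00:00
#SBATCH --gres=tmpdir:100G

# Sketch: stage data through the node-local $TMPDIR.
# my_app, input.dat and result.dat are hypothetical placeholders.

stage_in() {
  cp "$WORK/input.dat" "$TMPDIR/"
}

stage_out() {
  # $TMPDIR is deleted when the job ends, so keep the result in $WORK
  mkdir -p "$WORK/results_$SLURM_JOB_ID"
  cp "$TMPDIR/result.dat" "$WORK/results_$SLURM_JOB_ID/"
}

main() {
  stage_in || exit 1
  trap stage_out EXIT   # runs when the script exits, also if my_app fails
  cd "$TMPDIR" || exit 1
  my_app input.dat
}

# Only run inside a Slurm job (SLURM_JOB_ID is set by Slurm)
if [ -n "${SLURM_JOB_ID:-}" ]; then
  main
fi
```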

Burst Buffer

To prepare for the HPC cluster that will follow CARL, we have added a server with four NVMes to the cluster, which provides a so-called burst buffer. The internal I/O bandwidth of this server is about 12 GB/s, and it is connected via two FDR Infiniband links, which can also provide a throughput of 12 GB/s. For a single compute node, the bandwidth is limited to 6 GB/s (single FDR link). The total capacity of the system is 5900 GB, and a volume of the required size can be requested in a job.

At the moment, this server can be accessed only from a couple of compute nodes (mainly mpcb008 and mpcb017) and upon request to Scientific Computing. The following (incomplete) job script shows how this burst buffer can be used:

#!/bin/bash
#SBATCH -p carl.p
#SBATCH ...
# size of the burst buffer in GB
#SBATCH --license nvmesh_gb:500
...
# change to burst buffer and run I/O-intensive application
cd "$NVMESHDIR"
# this assumes I/O is done in the working directory
ioapp
# to keep the final result, copy it to $WORK
cp result.dat "$WORK/"

Using the burst buffer is quite similar to using $TMPDIR:

- You need to request a volume of the required size on the burst buffer. This is done in the form of a license request, where one nvmesh_gb is equivalent to 1 GB. The total number of licenses/GB is 5900, so jobs can only start if enough licenses (space on the device) are available.

- In your job script, you need to make sure I/O is done to $NVMESHDIR. This depends on your application, but it might involve cd-ing into the directory and/or copying initial data to it.

- When the job is completed, the directory $NVMESHDIR is deleted automatically and permanently, so any files you need to keep have to be copied to $WORK or some other place ($WORK is probably the fastest option at that point).

rsync: File transfer between different user accounts

Sometimes you may want to transfer files from one account to another. This often applies to users who had to change their account, for example because they switched from their student account to their new employee account.

In this case, you may want to use rsync when logged in to your old account:

rsync -avz $HOME/source_directory abcd1234@carl.hpc.uni-oldenburg.de:/user/abcd1234/target_directory

Here, abcd1234 is the new account. You will have to type in the password of the target account to proceed with the command.

- -a mandatory (archive mode). It copies directories recursively and preserves the access rights (permissions) and timestamps of the files; the copies will belong to the new account you log in to.

- -v optional. rsync reports every file it copies. This can flood your whole terminal session, so you may want to redirect the output into a file if you want to keep track of the copied files afterwards:

rsync -avz $HOME/source_directory abcd1234@carl.hpc.uni-oldenburg.de:/user/abcd1234/target_directory > copy-log.txt 2>&1

- -z optional. Compresses the data during the transfer, which reduces the amount of data sent over the network.
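One detail of the command above is easy to miss: because source_directory is given without a trailing slash, rsync creates target_directory/source_directory on the new account; with a trailing slash (source_directory/), only its contents are copied into target_directory. The sketch below demonstrates this with local temporary directories instead of the remote account; the function name is hypothetical.

```shell
#!/bin/bash
# Hypothetical demo of rsync's trailing-slash rule, using local temp dirs.
demo_trailing_slash() {
  local base
  base=$(mktemp -d)
  mkdir -p "$base/source_directory" "$base/target_a" "$base/target_b"
  touch "$base/source_directory/file.txt"
  # without trailing slash: the directory itself is copied
  rsync -a "$base/source_directory" "$base/target_a"
  # with trailing slash: only the directory's contents are copied
  rsync -a "$base/source_directory/" "$base/target_b"
  (cd "$base" && find target_a target_b -type f | sort)
  rm -rf "$base"
}
```

Running demo_trailing_slash lists target_a/source_directory/file.txt and target_b/file.txt. Adding -n (--dry-run) to the real rsync command shows what would be transferred without copying anything.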

Managing access rights of your folders

Unlike the directory structure on HERO and FLOW, the folder hierarchy on CARL and EDDY is flat: directories are no longer nested inside each other. This inevitably changes how access rights are managed. If you don't change the access rights for your directory on the cluster, the command "ls -l /user" will show you something like this:

drwx--S--- 3 abgu0243 agtheochem 226 2. Feb 11:55 abgu0243

This line contains the following information:

drwx--S---

- the first letter marks the file type; in this case it is a "d", which means we are looking at a directory.

- the following characters are the access rights: "rwx--S---" means that only the owner can read, write and execute (enter) the directory, and thus access its contents.

- The "S" stands for "set group ID" (setgid). On a directory, it causes new files and subdirectories created inside it to inherit the directory's group; the capital "S" indicates that the group itself has no execute permission. This was a temporary option to ensure safety while we were opening the cluster to the public. It is possible that the "S" is not set when you are looking at this guide; that is okay and intended.

abgu0243

- current owner of the file/directory

agtheochem

- current group of the file/directory

- this is your primary group. You can list all of your groups (primary and secondary) with the command "groups $(whoami)". It will output something like this: "abcd1234: group1 group2".

abgu0243

- current name of the file/directory

Basically, three commands are needed to modify access rights on the cluster:

- chmod

- chgrp

- chown

You will most likely need just one or two of these commands; nevertheless, we list and describe all three for completeness.

chmod

The command chmod directly modifies the access rights of the specified file/directory. chmod has two different modes, symbolic mode and octal mode, but their syntax is pretty much the same:

- symbolic mode

chmod [OPTION]... MODE[,MODE]... FILE...

- octal-mode

chmod [OPTION]... OCTAL-MODE FILE...

The following table shows how the three user categories are specified in each mode:

| User type | Symbolic mode | Octal mode |
|---|---|---|
| Owner of the file | u | 1st digit |
| Group of the file | g | 2nd digit |
| Other users | o | 3rd digit |
| Owner, group and others | a | all three digits |

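Possible access rights are: r = read, w = write, x = execute. In octal mode, each digit is the sum of the rights granted to that category, with r = 4, w = 2 and x = 1 (e.g. 7 = rwx, 6 = rw-, 4 = r--).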

Examples for the symbolic mode

- allow your group to read, but not to write or execute your file

chmod g=r yourfile.txt

- "=" always clears all access rights of the given category and then sets the ones you specify. For example, if the group could read, write and execute the file mentioned above, the command "chmod g=r yourfile.txt" leaves the group with read access only. Besides that, you can use "+" to add specific rights and "-" to remove them.

- allow other users to read and write, but not to execute your file

chmod o=rw yourfile.txt or chmod o+rw yourfile.txt (the latter only adds rights, so an existing execute permission for other users stays in place)

Examples for the octal mode

For comparison, we will be using the same examples as in the symbolic mode shown above:

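- allow your group to read, but not to write or execute your file (the owner can still read, write and execute; note that the last digit also gives other users read access)

chmod 744 yourfile.txt

- allow other users to read and write, but not to execute your file (the owner can still read, write and execute; note that the middle digit also gives your group read and write access)

chmod 766 yourfile.txt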

An easy way to calculate these numbers is using the following tool: chmod-calculator.com
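The calculation itself is simple: within each user category, add 4 for r, 2 for w and 1 for x. The function below is a hypothetical sketch of exactly that rule, applied to the nine permission characters shown by ls -l (without the leading file type character); it ignores the special setuid/setgid/sticky bits themselves but still counts a lowercase s or t as execute permission.

```shell
#!/bin/bash
# Hypothetical helper: convert e.g. "rwxr--r--" into "744".
# r = 4, w = 2, x = 1 per category (owner, group, others).
# Lowercase s/t imply x; the special bits themselves are not included.
perm_to_octal() {
  local perms=$1 result="" i j c digit
  for i in 0 3 6; do
    digit=0
    for j in 0 1 2; do
      c=${perms:i+j:1}
      case $c in
        r) digit=$((digit + 4)) ;;
        w) digit=$((digit + 2)) ;;
        x|s|t) digit=$((digit + 1)) ;;
      esac
    done
    result+=$digit
  done
  echo "$result"
}
```

For example, perm_to_octal rwxr--r-- prints 744 and perm_to_octal rwxrw-rw- prints 766, matching the examples above. For an existing file, GNU stat -c %a yourfile.txt prints the octal value directly.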

chgrp

The command chgrp (or change group) will change the group of your file/directory. The syntax of the command looks like this:

chgrp [OPTION] GROUP FILE

Example

Change the group of your file/directory

chgrp yourgrp /user/abcd1234/randomdirectory

If you are reading this part of the wiki, you might be looking for a way to change the group of your files after you changed your unix-group. A short example on how to do that can be found here.

chown

The command chown (or: change owner) does what you think it does: it changes the owner of a file or directory. The syntax of the command looks like this:

chown [OPTION]... [OWNER][:[GROUP]] FILE...

Note: In most cases, you will not need to use this command!

Examples on granting and restricting access to your files

- How can I grant access to a specific folder?

In Linux, access rights must be set on every directory along the path, up to your $HOME directory (/user/abcd1234). For example, suppose that the subfolder folder2 should be shared. With the following command alone, access would not yet be possible:

chmod o+r $HOME/folder1/folder2 # negative example

Other users will still be denied access because they have no permission on the preceding folders $HOME and folder1. Each folder that has to be passed through needs execute permission (+x) before the last folder in the chain can be accessed at all, no matter whether read (+r), write (+w) or execute (+x) permission is granted on that last folder.

So to give other users read access to folder2, the following commands must be executed:

chmod o+x $HOME $HOME/folder1
chmod o+rx $HOME/folder1/folder2
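To find out which directory along the path is still missing the execute permission, you can display the permissions of every path component. The sketch below uses namei -l from util-linux if it is available and otherwise falls back to GNU stat, walking up the path one directory at a time; the function name check_path_access is hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: show permissions, owner and group of every
# directory on the way to TARGET, e.g. "$HOME/folder1/folder2".
check_path_access() {
  local target=$1 p
  if command -v namei >/dev/null 2>&1; then
    namei -l "$target"   # util-linux: one line per path component
  else
    # Fallback with GNU stat: walk from TARGET up to / (or . for relative paths)
    p=$target
    while [ "$p" != / ] && [ "$p" != . ]; do
      stat -c '%A %U %G %n' "$p"
      p=$(dirname "$p")
    done
  fi
}
```

For example, check_path_access "$HOME/folder1/folder2" shows whether every directory above folder2 has an x in the last permission triplet (other users).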

- How can I grant access to a file/folder for another member of my primary group?

In case you only want to give permission to your group members, just replace o+x with g+x:

chmod g+x $HOME
chmod g+x $HOME/your_folder

- Members of your group can then enter the folder you specified, but they cannot list the contents of your $HOME, so your other folders are not visible to them.

- Adding "rx" on the folder additionally allows group members to list its contents (other folders in $HOME remain hidden). Please note that the +rx privileges allow the group members to copy the corresponding (readable) files into their own directories.

chmod g+rx $HOME/your_folder

- How can I grant access to a file/folder for another member of one of my secondary groups?

chgrp -R your_secondary_group $HOME/your_folder

- This makes "your_secondary_group" the group of "$HOME/your_folder" and everything in it, so the group permissions set on these files and folders apply to the members of that group.

- How can I grant access to a file/folder for anyone without anyone being able to access files/folders stored in the same directory?

chmod o+x $HOME/your_folder

NOTE: We used "+" in every example above. Remember that using "+" (instead of "=", which overwrites rights) will keep all other rights that are applied to the file/folder and will only change the ones you added!

- How can I prevent anyone else from reading, modifying, or even seeing files/directories in my directories?

chmod u=rwx,go= $HOME/your_folder

Storage Systems Best Practice

Here are some general remarks on using the storage systems efficiently:

- Store all files and data that cannot be reproduced (easily) on $HOME for highest protection.

- Carefully select files and data for storage on $HOME and compress them if possible, as storage on $HOME is the most expensive.

- Store large data files that can be reproduced with some effort (re-running a simulation) on $DATA.

- Data created or used during the run time of a simulation should be stored in $WORK; avoid reading from or writing to $HOME, in particular if you are dealing with large data files.

- If possible, avoid writing many small files to any file system.

- Clean up your data regularly.

Compressing and Moving Files to $DATA or $OFFSITE

The GPFS for $WORK only offers a limited amount of storage space for data files that are actively needed for processing on the HPC cluster. Any data files no longer actively used should be moved to $DATA or $OFFSITE to free space in $WORK. The $OFFSITE directory should be used if you want to keep data files from a finished project (i.e. if you want to archive the data files). Currently, $OFFSITE is located on the ESS file system, but a different (slower and less expensive) storage system may be used in the future. The default quota for $OFFSITE is 12.5 TB.

The following steps are recommended to move data files to $OFFSITE (and likewise to $DATA):

- Collect all files/directories in a separate directory, e.g. project_xyz (create a new one if needed). Make sure to only collect the data files that you absolutely must keep. Make sure that a description of the data is available, too.

- Consider packing the directory project_xyz into a single archive, e.g. using the command

$ tar cvf $OFFSITE/project_xyz.tar project_xyz/ > $OFFSITE/project_xyz.file.lst

in particular if you have many individual files smaller than 1 MB. With this command, the archive is created directly in your $OFFSITE directory and you can skip the rsync step below. In addition, a text file project_xyz.file.lst listing all the files and directories in the archive is created next to it.

- Consider compressing your data files or the archive from the previous step, e.g. with

$ zstd -T0 --rm $OFFSITE/project_xyz.tar

(note that the original tar-file is deleted after successful compression). Alternatively, use compression when creating the archive, for example with the command

$ tar -I zstd -cvf $OFFSITE/project_xyz.tar.zst project_xyz/ > $OFFSITE/project_xyz.file.lst

If your data files already use a compressed format, skip this step.

- Optional: Use rsync to copy your (archived and compressed) directory to $OFFSITE,

$ rsync -av project_xyz.tar.zst $OFFSITE

since the same command can simply be rerun if the process gets interrupted. Avoid copying data during the usual office hours.

- Create a README file or similar that briefly explains what can be found in project_xyz.tar.zst and the reason for keeping it stored.

- Optional: after the rsync has completed, you can perform a sanity check using e.g.

$ md5sum project_xyz.tar.zst $OFFSITE/project_xyz.tar.zst

which computes the checksums of both the original and the copied file. The checksums should be identical; if they are not, the rsync process was most likely aborted and should be restarted. If you created a tar-file directly in $OFFSITE, you can check its integrity with the command

$ tar -I zstd -tf $OFFSITE/project_xyz.tar.zst > /dev/null && echo "no error"

which should print no error if everything is ok.

- If you are sure the files have been copied correctly, you can delete the original ones to free space on the file system.

- Optional: remove the write permissions from the files and directories in $OFFSITE, e.g.

$ chmod a-w $OFFSITE/project_xyz.tar.zst

for a single file or

$ chmod -R a-w $OFFSITE/project_xyz/

for a full directory.
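The packing, compression, integrity check and write-protection steps can also be combined into one small shell function. This is a minimal sketch, assuming GNU tar with zstd installed (for tar -I zstd) and that $OFFSITE is set; the function name archive_to_offsite is hypothetical. It deliberately does not delete the original directory, so you can still run the checks described above before removing it yourself.

```shell
#!/bin/bash
# Hypothetical helper: pack DIR into DEST/<name>.tar.zst (DEST defaults to $OFFSITE),
# write DEST/<name>.file.lst, verify the archive and make it read-only.
# The original directory is NOT deleted.
archive_to_offsite() {
  local dir=${1%/} dest=${2:-${OFFSITE:-}} name archive
  if [ -z "$dir" ] || [ -z "$dest" ]; then
    echo "usage: archive_to_offsite DIR [DEST]  (DEST defaults to \$OFFSITE)" >&2
    return 1
  fi
  name=$(basename "$dir")
  archive="$dest/$name.tar.zst"

  # pack and compress in one step; -v writes every member into the .file.lst
  tar -I zstd -cvf "$archive" "$dir" > "$dest/$name.file.lst" || return 1

  # integrity check: read the whole archive back, discard the listing
  tar -I zstd -tf "$archive" > /dev/null || return 1

  # protect the archive against accidental changes
  chmod a-w "$archive"
  echo "archived $dir to $archive"
}
```

For example, archive_to_offsite project_xyz creates $OFFSITE/project_xyz.tar.zst and $OFFSITE/project_xyz.file.lst.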

The main idea of $OFFSITE is that once a file or directory has been copied there, it will not be changed afterwards. Please note that the data in $OFFSITE will be deleted some time after your account has been deactivated.