KNIME 2016

| (25 intermediate revisions by the same user not shown) | |||

| Line 51: | Line 51: | ||

# Download the plugin [[media:Cluster-exec-slurm-20190807-directory.zip|[zip]]] | # Download the plugin [[media:Cluster-exec-slurm-20190807-directory.zip|[zip]]] | ||

# Unpack the zip-file on your local computer | # Unpack the zip-file on your local computer | ||

# Open KNIME and go to File-->Preferences | # Open KNIME and go to ''File''-->''Preferences'' | ||

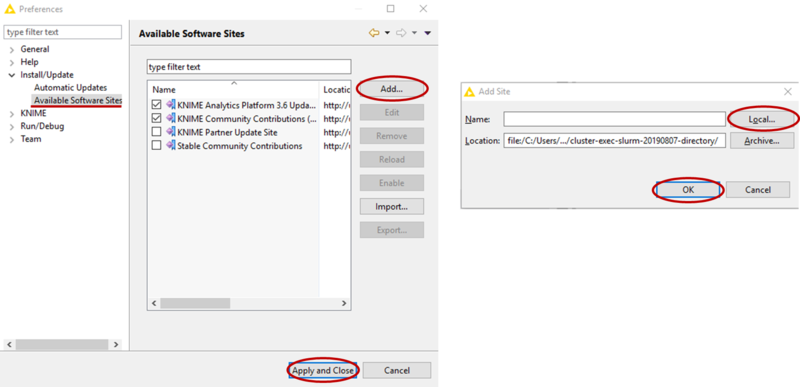

# Add the location of the directory form step 2 as a software site (see picture) | # Add the location of the directory form step 2 as a software site (see picture) | ||

#:[[Image:KNIME_SetupSCE_1.png|800px|Adding Software Site for Plugin]] | #:[[Image:KNIME_SetupSCE_1.png|800px|Adding Software Site for Plugin]] | ||

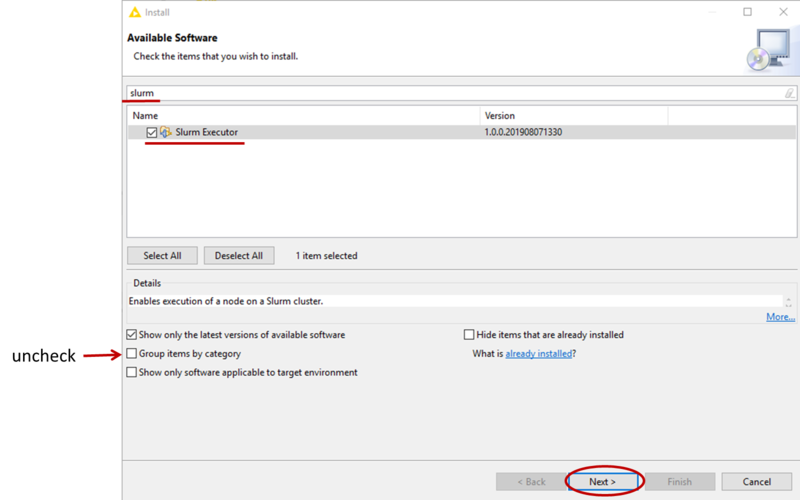

# Now go to File-->Install KNIME Extension, if needed uncheck | # Now go to ''File''-->''Install KNIME Extension'', if needed uncheck ''Group items by category'', then find the ''Slurm Executor'' and check it for installation (see picture). Then click ''Next'' and follow the instructions to install the extension (at the end, do not worry about the security warning and confirm the installation). | ||

#:[[Image: | #:[[Image:KNIME_SetupSCE_2.png|800px|Installing the Slurm Executor Plugin]] | ||

# After the installation of the plugin you need to restart KNIME (it will ask you to do so). | |||

==== Configuration of the Slurm Executor ==== | |||

<ol> | |||

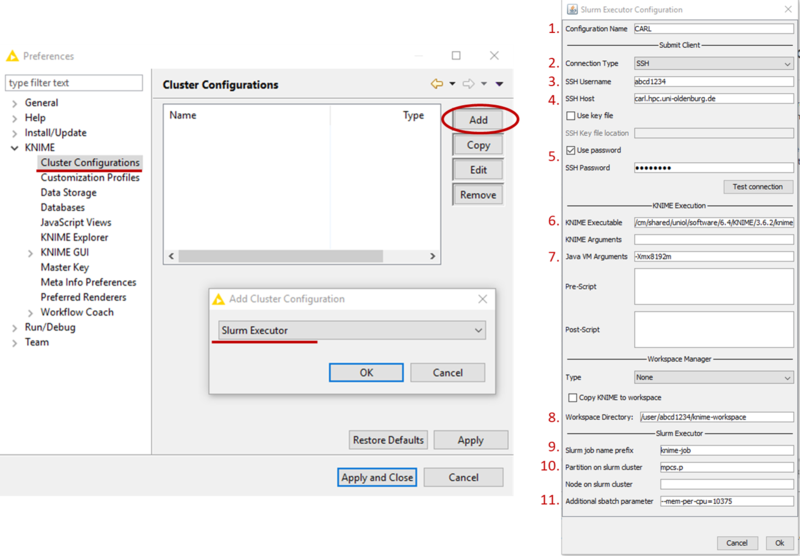

<li>Once you have installed the plugin and restarted KNIME, go to ''File''-->''Preferences'' again.</li> | |||

<li>Select the ''Cluster Configuration'' under ''KNIME'' and click ''Add'' (see picture below)</li> | |||

<li>From the drop-down list, select ''Slurm Executor'' and confirm with ''Ok'' (see picture below)</li> | |||

::[[Image:KNIME_SetupSCE_3.png|800px|Configuration of the Slurm Executor Plugin]] | |||

<li>Enter the following information in the appropriate fields (see picture above)</li> | |||

<ol> | |||

<li>'''Configuration Name''': CARL (or any other name you wish to use)</li> | |||

<li>'''Connection Type''': SSH</li> | |||

<li>'''SSH Username''': your university login (abcd1234)</li> | |||

<li>'''SSH Host''': <tt>carl.hpc.uni-oldenburg.de</tt></li> | |||

<li>'''SSH Password''': Check ''Use password'' and enter your university password, alternatively check ''Use key file'' and give location of the key file (e.g. <tt>/home/yourname/.ssh/id_rsa</tt></li> | |||

: At this point, you might want to check if you can connect to the cluster. If the test fails, you may have to install an ssh-client on your computer. Also check, if SSH2 is correctly configured in KNIME. For that, save your configuration so far, then go to ''File''-->''Preferences'' and find ''Network Connections'' under ''General''. In ''SSH2'', make sure that ''SSH2 home'' points to the right folder (typical <tt>.ssh</tt> in your home folder. Check if you can connect to the cluster with <tt>ssh</tt> from a shell/terminal. Once test of the connection to the cluster is successful, continue to edit the configuration of the Slurm Executor: | |||

<li>'''KNIME Executable''': <tt>/cm/shared/uniol/software/6.4/KNIME/3.6.2/knime</tt> (location of the executable on the cluster, you can find it after loading the module with | |||

<pre>$ which knime /cm/shared/uniol/software/6.4/KNIME/3.6.2/knime</pre> e.g. in case of a different version)</li> | |||

<li>'''Java VM Arguments''': it is recommended to set memory for JVW, e.g. with <tt>-Xmx8192m</tt> to 8GB</li> | |||

<li>'''Workspace Directory''': a directory on the cluster, e.g. <tt>/user/abcd1234/knime-workspace</tt>, KNIME will use this directory to store data for and during the execution of jobs on the cluster. If you expect a lot of I/O, please use <tt>/gss/work/abcd1234/knime-workspace</tt> for better performance.</li> | |||

<li>'''Slurm job name prefix''': should be a unique identifier for your KNIME jobs on the cluster, e.g. ''knime-job-abcd1234'' (if multiple jobs with the same name exist at the same time it can lead to problems)</li> | |||

<li>'''Partition on slurm cluster''': e.g. ''mpcs.p'', but you are free to choose here from the available partitions (here ''mpcs.p'' is a good choice because of the memory requirement)</li> | |||

<li>'''Additional sbatch parameter''': e.g. <tt>--mem-per-cpu=10375</tt> to allocated memory or other specific resources (time, GRES, ...)</li> | |||

</ol> | |||

<li>Confirm your configuration with ''Ok'' and ''Apply and Close''</li> | |||

</ol> | |||

==== Test with Example Case ==== | |||

Once the Slurm Executor plugin is installed and configured, you can test it with the following steps: | |||

# Download the Example case [[media:TestSLURMClusterExecution.zip|[zip]]] | |||

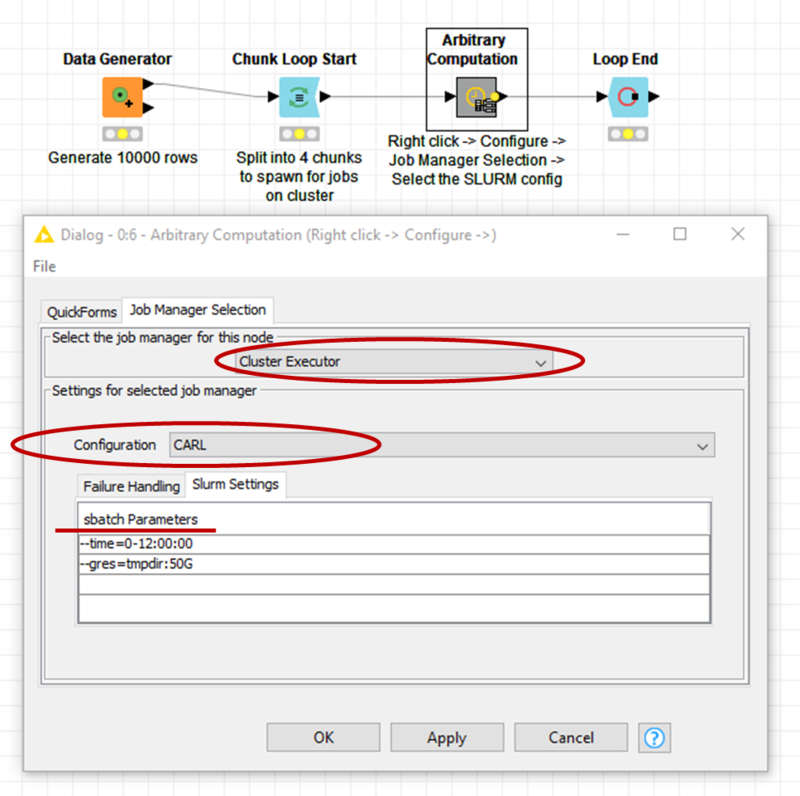

# Open KNIME and import the workflow from the <tt>zip</tt>-file (the workflow can be seen in the picture below) | |||

# Right-click on the node ''Arbitrary Computation'' and select ''Configure'', then select the ''Cluster Executor'' as job manager with the configuration ''CARL'' (or the name you have choosen) | |||

#:[[Image:KNIME_SetupSCE_4.png|800px|Example Workflow and Node Configuration]] | |||

# Add Slurm Settings as needed (see picture), e.g. to set the time limit or to request a GRES (you may have to close with ''Ok'' and reopen ''Configure'' before you see the Slurm settings) | |||

# Now you can execute the workflow or individual nodes as usual | |||

# To monitor your jobs on the cluster, you can right-click the node again and select ''Job Manager View'', alternatively login to the cluster and use Slurm commands directly | |||

If everything was setup correctly, the example workflow should submit four jobs which should complete within two minutes. | |||

==== Tips and Best Practices ==== | |||

* Use wrapped Metanodes: All nodes can be executed on the cluster but it is advised to encapsulate the computational-intensive part of the workflow in a wrapped metanode and configure this metanode to be executed on the cluster | |||

* Load the data on the cluster: If your workflow handles a lot of data it is best if you load the data from a storage accessible to the cluster inside the wrapped metanode. You can use SMB to mount e.g. <tt>$WORK</tt> to copy data to and from the cluster. | |||

* Pro tip: Use the nodes “Don’t Save Start” and “Don’t Save End” at the beginning and end of the workflow inside the wrapped metanode. This will reduce the amount of data which is downloaded from the cluster. (An option to save only the final result for wrapped metanodes is planned). | |||

== Documentation == | == Documentation == | ||

To find out more about ''KNIME Analytics Platform'', you can take a look at this [https://www.knime.com/knime-software/knime-analytics-platform overview].<br /> | To find out more about ''KNIME Analytics Platform'', you can take a look at this [https://www.knime.com/knime-software/knime-analytics-platform overview].<br /> | ||

The full documentation and more learning material can be found [https://www.knime.com/resources here]. | The full documentation and more learning material can be found [https://www.knime.com/resources here]. | ||

Latest revision as of 15:07, 8 August 2019

Introduction

KNIME Analytics Platform is open-source software for creating data science applications and services.

KNIME stands for KoNstanz Information MinEr.

Installed version

The currently installed version is available in the environment hpc-env/6.4:

KNIME/3.6.2

KNIME Module

If you want to find out more about KNIME on the HPC Cluster, you can use the command

module spider KNIME

This will show you basic information, e.g. a short description and the currently installed versions.

To load the module (optionally a specific version, e.g. KNIME/3.6.2), use the command, e.g.

module load KNIME

Always remember: module names are case sensitive!
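On the cluster, loading the environment and the module can be wrapped in a small shell function. This is a sketch. It assumes that `module load hpc-env/6.4` selects the environment named above and that the `module` command is available in your shell. The function name `load_knime` is hypothetical. The printed path is the kind of value that the Slurm Executor configuration later expects as "KNIME Executable".

```shell
# Hypothetical helper: load hpc-env/6.4 and a KNIME module, then print the
# path of the knime launcher. Module names and versions are case sensitive.
load_knime() {
  version="${1:-3.6.2}"
  if ! command -v module >/dev/null 2>&1; then
    echo "error: 'module' is not available in this shell (are you on the cluster?)" >&2
    return 1
  fi
  module load hpc-env/6.4 || return 1
  module load "KNIME/${version}" || return 1
  command -v knime
}
```

Usage on the cluster: `load_knime`, or `load_knime 3.6.2` to request a version explicitly.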

Using KNIME on the HPC Cluster

Basically, you have two options to use KNIME on the HPC cluster: 1) you can start KNIME within a job script and execute a prepared workflow, or 2) you can use the SLURM Cluster Execution plugin from your local workstation to offload selected nodes of your workflow to the cluster. Both options are described briefly below.

Using KNIME with a job script

This approach is straightforward once you have prepared a workflow for execution on the cluster. You need to copy all the required files to a directory on the cluster (the workflowDir). Then you write a job script that calls KNIME and runs your workflow. A minimal example is:

#!/bin/bash
#SBATCH --partition carl.p

knime -nosplash -application org.knime.product.KNIME_BATCH_APPLICATION -workflowDir="$HOME/knime-workspace/Example Workflows/Basic Examples/Simple Reporting Example"

The workflow in this example becomes available once you have started the KNIME GUI on the cluster, which is recommended at least once. Additional SLURM options may be used to request memory, run time and other resources (see elsewhere in this wiki for details). KNIME's batch mode also accepts further options, e.g. to set the memory for the Java Virtual Machine. Please refer to the KNIME documentation for details.
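A job script that also requests memory and run time might look like the one generated by the following sketch. The helper `write_knime_job` is hypothetical. All resource values are illustrative assumptions, not site recommendations: `--mem=10G`, `--time=02:00:00` and the job name. `-reset` is a KNIME batch option that resets the workflow before it is executed. The generated script loads the modules explicitly, so that `knime` is on the job's PATH.

```shell
# Hypothetical helper: write a Slurm job script that runs a KNIME workflow in batch mode.
# Usage: write_knime_job WORKFLOW_DIR [OUTPUT_FILE]
write_knime_job() {
  workflow_dir="$1"
  out="${2:-knime_job.sh}"
  # Unquoted EOF: ${workflow_dir} is expanded now, and "\\" is written as a single "\"
  cat > "$out" <<EOF
#!/bin/bash
#SBATCH --partition=carl.p
#SBATCH --mem=10G
#SBATCH --time=02:00:00
#SBATCH --job-name=knime-batch

module load hpc-env/6.4
module load KNIME/3.6.2

knime -nosplash -reset \\
  -application org.knime.product.KNIME_BATCH_APPLICATION \\
  -workflowDir="${workflow_dir}"
EOF
  echo "$out"
}
```

For example, `write_knime_job "$HOME/knime-workspace/Example Workflows/Basic Examples/Simple Reporting Example"` writes `knime_job.sh`. You would then submit it with `sbatch knime_job.sh`.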

Using KNIME with the SLURM Cluster Execution Plugin

The SLURM Cluster Execution Plugin allows you to offload some nodes in your workflow to the cluster. To use the plugin, you first need to install and configure it as described here. Please note that the plugin is not officially supported by KNIME. It can be used as is; if you have questions, please send them to Scientific Computing.

Prerequisites

You need to install locally the same version of KNIME that you want to use on the cluster (an older local version might work, but a newer one may fail). You also need to be able to connect to the cluster with ssh; optionally, you can prepare an identity (key) file for the login. It is also recommended to start the KNIME GUI once on the cluster to create the default workflowDir (knime-workspace) in your HOME directory. Alternatively, you can create it manually.
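The SSH prerequisites can be prepared from a terminal on your local computer. The following is a sketch that assumes OpenSSH (`ssh-keygen`, `ssh-copy-id`) is installed locally. `abcd1234` is the placeholder login used on this page, and the function names are hypothetical. The key is written in the classic PEM format (`-m PEM`) as a precaution, because the SSH library built into KNIME may not read the newer OpenSSH key format. This is an assumption about your KNIME version.

```shell
# Placeholders: replace abcd1234 with your own university login.
CARL_USER="${CARL_USER:-abcd1234}"
CARL_HOST="carl.hpc.uni-oldenburg.de"

# Create a key pair (only if none exists) and install the public key on the cluster.
setup_carl_key() {
  key="$HOME/.ssh/id_rsa"
  mkdir -p "$HOME/.ssh" && chmod 700 "$HOME/.ssh"
  if [ ! -f "$key" ]; then
    # -m PEM: classic key format, a precaution for older SSH libraries (assumption)
    ssh-keygen -t rsa -b 4096 -m PEM -f "$key"
  fi
  ssh-copy-id -i "${key}.pub" "${CARL_USER}@${CARL_HOST}"
}

# Succeeds only if a non-interactive (key-based) login works.
check_carl_ssh() {
  ssh -o BatchMode=yes -o ConnectTimeout=10 "${CARL_USER}@${CARL_HOST}" true
}

# Create the default KNIME workspace on the cluster manually ($HOME expands remotely).
create_carl_workspace() {
  ssh "${CARL_USER}@${CARL_HOST}" 'mkdir -p "$HOME/knime-workspace"'
}
```

The key file created this way (`~/.ssh/id_rsa`) is the one you would point KNIME to under "Use key file".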

Installing the Plugin

- Download the plugin [zip] (Cluster-exec-slurm-20190807-directory.zip)

- Unpack the zip-file on your local computer

- Open KNIME and go to File-->Preferences

- Add the location of the directory from step 2 as a software site (see picture)

- Now go to File-->Install KNIME Extension. If needed, uncheck Group items by category, then find the Slurm Executor and check it for installation (see picture). Then click Next and follow the instructions to install the extension. At the end, do not worry about the security warning; confirm the installation.

- After the installation of the plugin you need to restart KNIME (it will ask you to do so).
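Unpacking the plugin and locating the directory for the software site can also be done in a terminal. This sketch assumes that `unzip` is installed. It also assumes that the archive contains an Eclipse p2 update site, recognisable by an `artifacts.jar`/`artifacts.xml` (or `site.xml`) file; check the unpacked contents if nothing is found. The function names and the default target directory are hypothetical.

```shell
# Print every directory below $1 that looks like an update site (assumed marker files).
find_update_site() {
  find "$1" -maxdepth 4 \( -name 'artifacts.jar' -o -name 'artifacts.xml' -o -name 'site.xml' \) \
    -exec dirname {} \; | sort -u
}

# Unpack the plugin archive and print candidate directories for the software site.
unpack_plugin() {
  zip="$1"
  dest="${2:-$HOME/knime-slurm-plugin}"
  mkdir -p "$dest"
  unzip -q -o "$zip" -d "$dest"   # -o: overwrite files from an earlier unpack
  find_update_site "$dest"
}
```

For example, `unpack_plugin ~/Downloads/Cluster-exec-slurm-20190807-directory.zip` prints the path(s) you could add as a local software site.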

Configuration of the Slurm Executor

- Once you have installed the plugin and restarted KNIME, go to File-->Preferences again.

- Select the Cluster Configuration under KNIME and click Add (see picture below)

- From the drop-down list, select Slurm Executor and confirm with Ok (see picture below)

- Enter the following information in the appropriate fields (see picture above)

- Configuration Name: CARL (or any other name you wish to use)

- Connection Type: SSH

- SSH Username: your university login (abcd1234)

- SSH Host: carl.hpc.uni-oldenburg.de

- SSH Password: Check Use password and enter your university password. Alternatively, check Use key file and give the location of the key file (e.g. /home/yourname/.ssh/id_rsa).

- At this point, you may want to test the connection to the cluster. If the test fails, you may have to install an SSH client on your computer. Also check whether SSH2 is correctly configured in KNIME. To do so, save your configuration so far, then go to File-->Preferences and find Network Connections under General. In SSH2, make sure that SSH2 home points to the right folder (typically .ssh in your home folder). Check that you can connect to the cluster with ssh from a shell/terminal. Once the connection test succeeds, continue editing the configuration of the Slurm Executor:

- KNIME Executable: /cm/shared/uniol/software/6.4/KNIME/3.6.2/knime. This is the location of the executable on the cluster. If you use a different version, you can find its location after loading the corresponding module with

$ which knime
/cm/shared/uniol/software/6.4/KNIME/3.6.2/knime

- Java VM Arguments: it is recommended to set the memory for the JVM, e.g. -Xmx8192m for 8 GB

- Workspace Directory: a directory on the cluster, e.g. /user/abcd1234/knime-workspace. KNIME will use this directory to store data for and during the execution of jobs on the cluster. If you expect a lot of I/O, please use /gss/work/abcd1234/knime-workspace for better performance.

- Slurm job name prefix: should be a unique identifier for your KNIME jobs on the cluster, e.g. knime-job-abcd1234 (multiple jobs with the same name existing at the same time can lead to problems)

- Partition on slurm cluster: e.g. mpcs.p. You are free to choose any of the available partitions, but mpcs.p is a good choice here because of the memory requirement.

- Additional sbatch parameter: e.g. --mem-per-cpu=10375 to allocate memory, or options for other specific resources (time, GRES, ...)

- Confirm your configuration with Ok and Apply and Close
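The Java heap set in "Java VM Arguments" has to fit into the memory that Slurm grants the job. Some headroom should remain for memory the JVM uses outside the heap. With the example values above, 10375 - 8192 = 2183 MB remain. The helper below is a hypothetical sketch of that check. It assumes the job runs on a single CPU, so that --mem-per-cpu equals the job's total memory. The 1024 MB minimum headroom is an arbitrary default, not a KNIME or Slurm requirement.

```shell
# Hypothetical helper: print matching "Java VM Arguments" and "Additional sbatch
# parameter" values and check that the heap leaves headroom in the allocation.
# Assumes one CPU per job, so --mem-per-cpu is the job's total memory (in MB).
knime_mem_settings() {
  heap_mb="$1"
  mem_per_cpu_mb="$2"
  min_headroom_mb="${3:-1024}"
  headroom_mb=$(( mem_per_cpu_mb - heap_mb ))
  echo "Java VM Arguments: -Xmx${heap_mb}m"
  echo "Additional sbatch parameter: --mem-per-cpu=${mem_per_cpu_mb}"
  if [ "$headroom_mb" -lt "$min_headroom_mb" ]; then
    echo "warning: only ${headroom_mb} MB left outside the Java heap" >&2
    return 1
  fi
  echo "headroom: ${headroom_mb} MB"
}
```

Running `knime_mem_settings 8192 10375` prints both settings and ends with `headroom: 2183 MB`.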

Test with Example Case

Once the Slurm Executor plugin is installed and configured, you can test it with the following steps:

- Download the example case [zip] (TestSLURMClusterExecution.zip)

- Open KNIME and import the workflow from the zip-file (the workflow can be seen in the picture below)

- Right-click on the node Arbitrary Computation and select Configure, then select the Cluster Executor as job manager with the configuration CARL (or the name you have chosen)

- Add Slurm Settings as needed (see picture), e.g. to set the time limit or to request a GRES (you may have to close with Ok and reopen Configure before you see the Slurm settings)

- Now you can execute the workflow or individual nodes as usual

- To monitor your jobs on the cluster, you can right-click the node again and select Job Manager View. Alternatively, log in to the cluster and use Slurm commands directly.

If everything was set up correctly, the example workflow should submit four jobs, which should complete within two minutes.
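To follow these jobs from a shell on the cluster, `squeue` shows pending and running jobs. `sacct` also lists finished ones; by default, it shows jobs since midnight of the current day. The sketch below filters `squeue` output by the job name prefix from the configuration. It assumes that the plugin's job names begin with that prefix, and the function names are hypothetical.

```shell
# Keep only lines whose second field (the job name) starts with the prefix in $1.
filter_by_prefix() {
  awk -v p="$1" 'index($2, p) == 1'
}

# List your queued/running jobs (id, name, state, elapsed time) with the KNIME prefix.
knime_jobs() {
  prefix="${1:-knime-job-$USER}"
  squeue --noheader -u "$USER" -o "%i %j %T %M" | filter_by_prefix "$prefix"
}

# Accounting records of your jobs, including finished ones (one line per job).
knime_jobs_history() {
  sacct -X -u "$USER" --format=JobID,JobName%40,State,Elapsed
}
```

With the example configuration, `knime_jobs knime-job-abcd1234` would show the four test jobs while they are queued or running.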

Tips and Best Practices

- Use wrapped metanodes: all nodes can be executed on the cluster, but it is advisable to encapsulate the computationally intensive part of the workflow in a wrapped metanode and configure this metanode to be executed on the cluster.

- Load the data on the cluster: if your workflow handles a lot of data, it is best to load the data inside the wrapped metanode from storage that is accessible to the cluster. You can use SMB to mount e.g. $WORK to copy data to and from the cluster.

- Pro tip: Use the nodes “Don’t Save Start” and “Don’t Save End” at the beginning and end of the workflow inside the wrapped metanode. This will reduce the amount of data which is downloaded from the cluster. (An option to save only the final result for wrapped metanodes is planned).
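If an SMB mount is not an option, you can copy data over SSH with rsync. This is a different technique from the SMB mount mentioned above. The sketch assumes that $WORK corresponds to /gss/work/abcd1234, as in the workspace example. It also assumes that rsync is installed on both sides and that SSH login works. The function names are hypothetical.

```shell
CARL_USER="${CARL_USER:-abcd1234}"     # placeholder login
CARL_HOST="carl.hpc.uni-oldenburg.de"

# Build the rsync target for a path below /gss/work/<login> (assumed to be $WORK).
carl_work_target() {
  p="${1#/}"   # drop a leading slash
  p="${p%/}"   # drop a trailing slash
  echo "${CARL_USER}@${CARL_HOST}:/gss/work/${CARL_USER}/${p}"
}

# Upload the contents of a local directory to a directory below $WORK.
push_to_work() {
  rsync -av --progress "${1%/}/" "$(carl_work_target "$2")/"
}

# Download the contents of a directory below $WORK into a local directory.
pull_from_work() {
  rsync -av --progress "$(carl_work_target "$1")/" "${2%/}/"
}
```

For example, `push_to_work ./input data/input` copies the local `input` folder to `/gss/work/abcd1234/data/input`. There, a node inside the wrapped metanode can read it.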

Documentation

To find out more about KNIME Analytics Platform, you can take a look at this overview.

The full documentation and more learning material can be found here.