File system and Data Management


Revision as of 08:56, 6 February 2017

The HPC cluster offers access to two important file systems. In addition, the compute nodes of the HERO-II cluster have local storage devices for high I/O demands. The available file systems are documented below. Please follow the guidelines given for best practice.

Storage Hardware

A GPFS Storage Server (GSS) serves as a parallel file system for the HPC cluster. The total (net) capacity of this file system is about 900 TB, and the read/write performance is up to 17/12 GB/s over FDR Infiniband. It is possible to mount the GPFS on your local machine using SMB/NFS (via the 10GE campus network). The GPFS should be used as the primary storage device for HPC, in particular for data that is read or written by the compute nodes. Currently, the GPFS offers no backup functionality (i.e. deleted data cannot be recovered).

The central storage system of IT services provides the NFS-mounted $HOME directories. It offers very high availability, snapshots and backup, and should be used for permanent storage, in particular for everything that cannot be recovered easily (program codes, initial conditions, final results, ...).

File Systems

The following file systems are available on the cluster:

| File System | Environment Variable | Path | Device | Data Transfer | Backup | Used for | Comments |
|---|---|---|---|---|---|---|---|
| Home | $HOME | /user/abcd1234 | Isilon Storage System | NFS over 10GE | yes | critical data that cannot easily be reproduced (program codes, initial conditions, results from data analysis) | high-availability file system, snapshot functionality, can be mounted on local workstation |
| Data | $DATA | /data/abcd1234 | GPFS | FDR Infiniband | not yet | important data from simulations for on-going analysis and long term (project duration) storage | parallel file system for fast read/write access from compute nodes, can be mounted on local workstation |
| Work | $WORK | /work/abcd1234 | GPFS | FDR Infiniband | no | data storage for simulation runtime, pre- and post-processing, short term (weeks) storage | parallel file system for fast read/write access from compute nodes, temporarily store larger amounts of data |
| Scratch | $TMPDIR | /scratch/<job-specific-dir> | local disk or SSD | local | no | temporary data storage during job runtime | directory is created at job startup and deleted at completion, job script can copy data to other file systems if needed |
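
Because $TMPDIR is deleted when the job completes, a job script typically copies its input from $WORK to $TMPDIR at startup and copies the results back before it ends. The following bash sketch illustrates this pattern; the helper names `stage_in`/`stage_out` and the directory names `my_project`, `input` and `results` are hypothetical, and it assumes the batch system sets $TMPDIR as described in the table.

```bash
#!/usr/bin/env bash
# Staging sketch for a job script. Assumes $WORK and $TMPDIR are set;
# all helper and directory names here are hypothetical examples.

# Copy input data from $WORK to the fast, job-local $TMPDIR.
stage_in() {
  local project=$1
  cp -r "$WORK/$project/input" "$TMPDIR/"
}

# Copy results back to $WORK before $TMPDIR is deleted at job completion.
stage_out() {
  local project=$1
  mkdir -p "$WORK/$project"
  cp -r "$TMPDIR/results" "$WORK/$project/"
}

# Example use inside a job script (my_simulation is a hypothetical program):
#   stage_in my_project
#   (cd "$TMPDIR" && ./my_simulation input results)
#   stage_out my_project
```

Anything that is not copied back by `stage_out` is lost once the job finishes.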

Quotas

Quotas are used to limit the storage capacity for each user on each file system (except $TMPDIR, which is not persistent after job completion). The following table gives an overview of the default quotas. Users with particularly high storage demands can contact Scientific Computing to have their quotas increased (within reason and depending on available space).

| File System | Hard Quota | Soft Quota | Grace Period |
|---|---|---|---|
| $HOME | 1 TB | none | none |
| $DATA | 2 TB | none | none |
| $WORK | 10 TB | 2 TB | 30 days |

The $WORK file system is special regarding quotas: it uses both a soft limit and a hard limit, whereas all other file systems only have a hard limit.

A hard limit means that you cannot write more data to the file system than your personal quota allows. Once the quota has been reached, any write operation will fail (including those from running simulations).

A soft limit, on the other hand, only triggers a grace period during which you can still write data to the file system (up to the hard limit). Only when the grace period is over can you no longer write data, again including from running simulations. Note that from then on writes fail even though you are still below the hard limit, as long as you remain above the soft limit.

What does this mean for $WORK? You can store data on $WORK for as long as you want while you are below the soft limit. When you exceed the soft limit (e.g. during a long, high-I/O simulation), the grace period starts. Within the grace period you can still produce more data, up to the hard limit. Once you delete enough of your data on $WORK to get below the soft limit, the grace period is reset. This system forces you to regularly clean up data that is no longer needed and helps to keep the GPFS storage system usable for everyone.
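
The rules above can be summarised as a small decision function. This is a simplified bash model for illustration only, not the actual GPFS implementation; the function name `work_write_allowed` is hypothetical, sizes are whole GB (taking 1 TB = 1000 GB for simplicity), and the grace clock is passed in as the number of whole days since usage first exceeded the soft limit.

```bash
#!/usr/bin/env bash
# Simplified model of the $WORK quota rules (not the real GPFS logic).
# Usage: work_write_allowed USAGE_GB SOFT_GB HARD_GB DAYS_OVER_SOFT GRACE_DAYS
# Prints "yes" if a further write would still succeed, "no" otherwise.
work_write_allowed() {
  local usage=$1 soft=$2 hard=$3 days_over=$4 grace=$5
  if (( usage >= hard )); then
    echo no      # hard limit reached: every write fails
  elif (( usage <= soft )); then
    echo yes     # not above the soft limit: no grace period running
  elif (( days_over < grace )); then
    echo yes     # above the soft limit, but the grace period is still running
  else
    echo no      # above the soft limit and the grace period has expired
  fi
}
```

With the default $WORK quotas (soft 2000, hard 10000, grace 30), `work_write_allowed 5000 2000 10000 10 30` prints `yes`, while `work_write_allowed 5000 2000 10000 31 30` prints `no` even though 5000 GB is well below the hard limit. A rough estimate of your current usage can be obtained with `du -sh "$WORK"`.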

Managing access rights of your folders

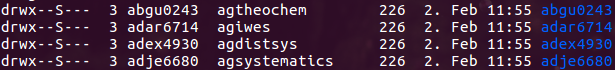

Different to the directory structure on HERO and FLOW, the folder hierarchy on CARL and EDDY is flat and less clustered together. We no longer have multiple directories stacked in each other. This leads to an inevitable change in the access right management. If you dont change the access rights for your directory on the cluster, the command "ls -l /user" will show you something like this:

If you look at the first line shown in the screenshot above, you can see the following information:

drwx--S--- 3 abgu0243 agtheochem 226 2. Feb 11:55 abgu0243

drwx--S---

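- The first letter marks the file type. In this case it is a "d", which means we are looking at a directory.

- The following characters are the access rights: "rwx--S---" means that only the owner can read (list), write (create or delete entries in) and execute (enter) the directory, so nobody else can access anything in it.

- The "S" in the group's execute position indicates the "set group ID" (setgid) bit. On a directory, setgid makes new files and subdirectories created inside it inherit the directory's group instead of the creator's primary group (on an executable file it would instead make the program run with the file's group). The uppercase "S" means the setgid bit is set while the group itself has no execute permission; a lowercase "s" would mean both are set. This was a temporary measure to ensure safety while we were opening the cluster to the public. It is possible that the "S" is not set when you read this guide; that is okay and intended.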

abgu0243

- current owner of the file/directory

agtheochem

- current group of the file/directory

abgu0243

- name of the file/directory
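
To inspect your own directory, `stat -c '%A %U %G %n' "$HOME"` (GNU coreutils) prints the permission string, owner, group and name shown in the `ls -l` line above. The bash function below is an illustrative sketch (the name `describe_mode` is hypothetical) that splits such a permission string into the parts explained above.

```bash
#!/usr/bin/env bash
# Illustrative helper: break an ls-style permission string into its parts.
describe_mode() {
  local mode=$1 type
  case ${mode:0:1} in           # first character: file type
    d) type="directory" ;;
    -) type="regular file" ;;
    l) type="symbolic link" ;;
    *) type="other" ;;
  esac
  echo "type: $type"
  echo "owner: ${mode:1:3}"     # characters 2-4: owner rights
  echo "group: ${mode:4:3}"     # characters 5-7: group rights
  echo "others: ${mode:7:3}"    # characters 8-10: rights of everyone else
  case ${mode:6:1} in           # group execute position also shows setgid
    s) echo "setgid: yes (group may also execute)" ;;
    S) echo "setgid: yes (group may not execute)" ;;
  esac
}

# Example: describe_mode "$(stat -c '%A' "$HOME")"
```

For the example string `drwx--S---`, the function reports a directory with owner rights `rwx`, group rights `--S`, no rights for others, and the setgid bit set without group execute permission.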

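Basically, we need three commands to modify access rights on the cluster:

- chmod: change the access rights (mode) of files and directories

- chgrp: change the group of files and directories

- chown: change the owner of files and directories (on Linux this normally requires administrator rights)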
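
As an illustration, the bash sketch below gives the members of one group read access to a directory while keeping everyone else out, assuming that is what you actually want. The helper name `share_with_group` is hypothetical, and `agtheochem` is only the example group from the `ls -l` line above; use your own group instead.

```bash
#!/usr/bin/env bash
# Sketch: share a directory read-only with one group, exclude all others.
# share_with_group is a hypothetical helper name.
share_with_group() {
  local dir=$1 group=$2
  chgrp "$group" "$dir"        # the directory now belongs to $group
  chmod g+rx,o-rwx "$dir"      # group may list (r) and enter (x); others get nothing
}

# Example:
#   share_with_group "$HOME" agtheochem
#   stat -c '%A %G' "$HOME"    # check the resulting rights and group
```

This only changes the directory itself. To let the group read the files inside as well, `chmod -R g+rX DIR` grants read access recursively and execute access only to directories (and to files that are already executable). chown is left out because changing the owner normally requires administrator rights.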

Storage Systems Best Practice

Here are some general remarks on using the storage systems efficiently:

- Store all files and data that cannot be reproduced (easily) on $HOME for highest protection.

- Carefully select files and data for storage on $HOME and compress them if possible, as storage on $HOME is the most expensive.

- Store large data files that can be reproduced with some effort (re-running a simulation) on $DATA.

- Store data created or used during the runtime of a simulation in $WORK, and avoid reading from or writing to $HOME during a run, in particular if you are dealing with large data files.

- If possible, avoid writing many small files to any file system.

- Clean up your data regularly.
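
Two of these points (compressing data for $HOME and avoiding many small files) can be addressed together by packing a directory into a single compressed archive. The bash sketch below uses standard GNU tar options; the helper name `archive_dir` and the example paths are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: pack a directory of many small files into one compressed archive.
# archive_dir is a hypothetical helper name.
archive_dir() {
  local src=$1 dest=$2
  # -c create, -z gzip-compress, -f archive file; -C switches to the parent
  # directory first, so the archive stores paths relative to it.
  tar -czf "$dest" -C "$(dirname "$src")" "$(basename "$src")"
}

# Example: archive_dir "$WORK/my_project/results" "$HOME/results.tar.gz"
# List the contents without extracting: tar -tzf "$HOME/results.tar.gz"
```

Passing absolute paths for both arguments avoids any ambiguity about where the archive is written.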