Difference between revisions of "Audio Data Processing"

(Created page with "= Audio Data Processing = '''A Minimal example to run audio data processing on the HERO cluster''' ''Authors: Hendrik Kayser and Tim Jürgens, Medical Physics, July 2014.'' =...") |

|||

| Line 19: | Line 19: | ||

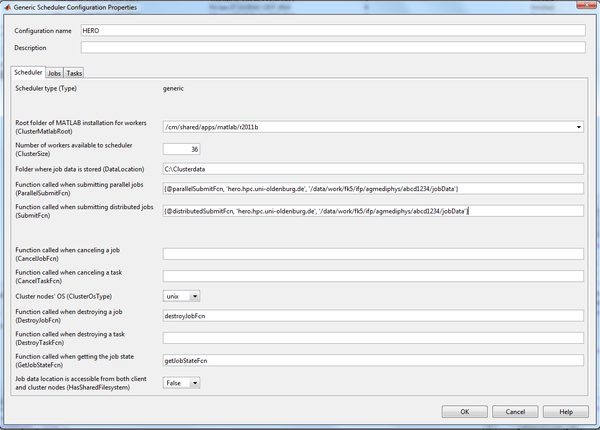

You should be able to login to HERO from your local machine and your local MATLAB should be configured according to [[MATLAB Distributing Computing Server]]. Your local configuration settings in Matlab could look like this under Windows: | You should be able to login to HERO from your local machine and your local MATLAB should be configured according to [[MATLAB Distributing Computing Server]]. Your local configuration settings in Matlab could look like this under Windows: | ||

[[Image:HERO_MATLAB_WINCONFIG.png|600px|Configuration example]] | |||

The minimal example outlined in the HERO-Wiki in subsection [[MATLAB Distributing Computing Server]] (parameter sweep in an ordinary | The minimal example outlined in the HERO-Wiki in subsection [[MATLAB Distributing Computing Server]] (parameter sweep in an ordinary | ||

Revision as of 17:53, 7 October 2014

Audio Data Processing

A minimal example to run audio data processing on the HERO cluster

Authors: Hendrik Kayser and Tim Jürgens, Medical Physics, July 2014.

Introduction

This short tutorial shows the steps needed to run a typical MATLAB data processing task (e.g., modeling or signal processing) on the HERO computer cluster using the MATLAB Distributed Computing Server (MDCS). It is built around a minimal example that contains the most important steps:

- Looping over “external” parameters (such as the SNR); this is the loop that is parallelized (parfor loop)

- Loading audio data (e.g., WAV or MAT files) from a folder on the cluster

- Processing audio data (e.g., resampling or amplifying)

- Using MATLAB’s built-in functions or toolbox functions (e.g., from the Signal Processing Toolbox)

- Using your own MATLAB functions that are stored on the cluster

- Storing the processed data (e.g., speech features) on the cluster
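The steps listed above can be sketched as a single MATLAB function. This is a hypothetical illustration, not the contents of “batchManipulateAudio.m”: the function name snrSweepSketch, the helpers addNoiseAtSnr and saveResult, the SNR values, and the .mat output format are all assumptions, and a MATLAB release that provides audioread is assumed. Save it as snrSweepSketch.m; it runs locally with or without an open pool.

```matlab
function snrSweepSketch(dataDir, resultDir)
% SNRSWEEPSKETCH  Hypothetical sketch of the tutorial's steps (NOT the code
% of batchManipulateAudio.m). dataDir holds .wav files, resultDir must exist.
snrs = [-5 0 5 10];                          % "external" parameter in dB (parallelized)
wavFiles = dir(fullfile(dataDir, '*.wav'));  % audio data stored on the cluster
parfor k = 1:numel(snrs)
    for f = 1:numel(wavFiles)
        % load audio data with a built-in function
        [x, fs] = audioread(fullfile(dataDir, wavFiles(f).name));
        % process it with an own function (defined below)
        y = addNoiseAtSnr(x, snrs(k));
        base = wavFiles(f).name(1:end-4);    % drop the 4-character '.wav' extension
        outFile = fullfile(resultDir, sprintf('%s_snr%+d.mat', base, snrs(k)));
        saveResult(outFile, y, fs);          % store the processed data
    end
end
end

function y = addNoiseAtSnr(x, snrDb)
% Add white Gaussian noise scaled so that 20*log10(rms(x)/rms(noise)) = snrDb.
noise = randn(size(x));
rmsX = sqrt(mean(x(:).^2));
rmsN = sqrt(mean(noise(:).^2));
y = x + noise * (rmsX / rmsN) * 10^(-snrDb / 20);
end

function saveResult(fileName, y, fs)
% save() must not be called directly in a parfor body, so wrap it here.
save(fileName, 'y', 'fs');
end
```

Calling save directly inside the parfor body is rejected by MATLAB because it violates the loop's workspace transparency; moving it into a small helper function avoids that.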

Running such a task in parallel on HERO involves some pitfalls that are not always obvious when you start implementing it. This tutorial should help you avoid most of them. Feedback on specific problems you encountered is always welcome; please send it to Tim Jürgens.

Preliminary requirements

You should be able to log in to HERO from your local machine, and your local MATLAB should be configured according to the wiki page MATLAB Distributing Computing Server. Under Windows, your local configuration settings in MATLAB could look like this:

[Figure: Configuration example (HERO_MATLAB_WINCONFIG.png)]

The minimal example on the HERO wiki page MATLAB Distributing Computing Server (a parameter sweep in an ordinary differential equation problem) should also run and finish successfully on HERO.

Installing and testing locally

First, test the framework locally without parallel processing. To do so, unzip the files in “dataProcessingMDCS.zip” and open “batchManipulateAudio.m” in MATLAB. Comment out line 7 (the “cd” command) and run the script. The run has finished successfully if 8 new files have been written to the subfolder “results”. Delete these 8 files again before continuing with the next steps.
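Checking and cleaning the “results” folder by hand gets tedious when the test is repeated. A hypothetical helper (the name checkResults is an assumption, not part of the package) that counts the files and deletes them only when the expected number was produced could look like this; note that it deletes every file in that folder:

```matlab
function nFiles = checkResults(resultDir, expected)
% CHECKRESULTS  Hypothetical helper: count the files in resultDir and, if the
% count equals the expected number, delete them for the next test run.
d = dir(resultDir);
d = d(~[d.isdir]);                      % ignore '.', '..' and subfolders
nFiles = numel(d);
if nFiles == expected
    fprintf('Run finished successfully: %d files found.\n', nFiles);
    for i = 1:nFiles
        delete(fullfile(resultDir, d(i).name));
    end
else
    warning('Expected %d files, found %d.', expected, nFiles);
end
end
```

Run it from the package folder as checkResults('results', 8).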

Next, test the framework locally with parallel processing. Type “matlabpool local 2” into the command line. This opens two local workers on your PC (this requires a processor with at least two cores). Run the script “batchManipulateAudio.m” again; the calculation is now done in parallel on the two cores. Again, the run has finished successfully if 8 new files have been written to the subfolder “results”. Delete these 8 files again before continuing.
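The local parallel test, written out as commands. matlabpool is the syntax of the MATLAB releases this tutorial was written for; later releases replaced it with parpool, shown as a comment (check which one your release supports):

```matlab
% Local parallel test with two workers (matlabpool syntax used in this tutorial)
matlabpool local 2        % open two workers on this PC
batchManipulateAudio      % the parfor loop is now distributed over both workers
matlabpool close          % release the workers again

% Equivalent in newer MATLAB releases:
%   parpool(2);
%   batchManipulateAudio
%   delete(gcp('nocreate'));
```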

Transfer of the framework to the cluster

Uncomment line 7 of “batchManipulateAudio.m” and enter the path on the cluster where your data will be stored. We suggest using your folder in the “work” directory of the cluster, because we will load, process, and store audio files, which may need a lot of disk space. This folder may be, for instance, /data/work/fk5/ifp/agmediphys/abcd1234/. The “work” directory offers more disk space than, e.g., the “user” directory (/user/fk5/ifp/agmediphys/abcd1234/). The caveat is that the “work” directory is not backed up; for details see File system.
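Instead of commenting line 7 in and out, a hypothetical variant of that line could change to the cluster folder only when it exists, so the same script runs unchanged locally and on HERO. This sketch assumes line 7 holds a plain cd call as described above; the path is the example folder from the text, and abcd1234 must be replaced by your user name:

```matlab
% Hypothetical replacement for line 7 of batchManipulateAudio.m
clusterDir = '/data/work/fk5/ifp/agmediphys/abcd1234/';  % your work folder on HERO
if exist(clusterDir, 'dir') == 7
    cd(clusterDir);   % on the cluster: work in the folder with the data
end                   % locally: stay in the current folder
```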

Connect to the cluster storage using the “Map network drive” option under Windows or “mount” under Linux; see Mounting Directories of FLOW and HERO. Alternatively, scp or an scp client (e.g., WinSCP under Windows) can be used. Copy all files of the package to your directory, making sure it is the same directory as specified in line 7 of “batchManipulateAudio.m”.
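Once the cluster directory is mapped or mounted, the copy can also be done from MATLAB. A hypothetical helper (the name copyPackage and both paths are assumptions; use your own local folder and your mapped drive or mount point):

```matlab
function copyPackage(srcDir, destDir)
% COPYPACKAGE  Hypothetical helper: copy all files of the unzipped package
% from srcDir to destDir, e.g. a mapped network drive or a mounted directory.
if exist(destDir, 'dir') ~= 7
    mkdir(destDir);
end
[ok, msg] = copyfile(fullfile(srcDir, '*'), destDir);
if ~ok
    error('Copy failed: %s', msg);
end
end
```

For example, copyPackage('C:\temp\dataProcessingMDCS', 'W:\dataProcessingMDCS'), where W: stands for whatever drive letter you mapped.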

Test the framework on the cluster

Type

sched = findResource('scheduler', 'Configuration', 'HERO');

into the command line to obtain the scheduler object for the HERO cluster configuration. Then type

job = batch(sched, 'batchManipulateAudio', 'matlabpool', 1);

to run the script on the cluster. The argument 'matlabpool', 1 requests one pool worker in addition to the worker that runs the script itself, so the job uses 2 workers in total. Here, too, the run has finished successfully if 8 new files have been written to the subfolder “results”. After the job has finished, you can retrieve status messages etc. with the command

jobData = load(job);

This also loads the variables that the script “batchManipulateAudio.m” uses outside the parfor loop. Error messages are stored in this variable as well.
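The whole submit, wait, and inspect cycle can be collected in a few lines. This sketch uses the findResource-based API of this tutorial (newer releases use parcluster instead of findResource and delete instead of destroy); wait, diary, and the task property ErrorMessage belong to that API, and the error check is an addition of this sketch:

```matlab
% Submit the script, wait for it, and inspect the result
sched = findResource('scheduler', 'Configuration', 'HERO');
job = batch(sched, 'batchManipulateAudio', 'matlabpool', 1); % script worker + 1 pool worker

wait(job);                            % block until the job has finished
diary(job);                           % show the command window output of the script

errMsg = job.Tasks(1).ErrorMessage;   % empty if the script ran without error
if isempty(errMsg)
    jobData = load(job);              % workspace variables of the script
else
    fprintf('Job failed: %s\n', errMsg);
end

% Optional: remove the job and its stored data from the scheduler
% destroy(job);
```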

Nice to know

After you enter the command that starts the job on the cluster (“job = batch…”), the command window shows how much runtime, memory, and disk space is reserved for the job. If you do not specify anything, the default values

runtime = 24:0:0 (24 hours)
memory = 1500M
diskspace = 50G

are used.

To specify different values, follow the instructions in the section “Advanced usage: Specifying resources” of the wiki page MATLAB Distributing Computing Server. A common case is that the default memory of 1500M is not sufficient, so you need to request, e.g., 2 to 4 GB. Note that the resources have to be specified again before each new job submission.

The Job Monitor in MATLAB (Parallel > Job Monitor) shows all your finished, queued, and running jobs. If your MATLAB version does not include the Job Monitor, you can check the status of a job with job.State.
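A command-line alternative to the Job Monitor, assuming sched and job were created with findResource and batch as in the commands earlier on this page. The Jobs, ID, and State properties belong to the scheduler and job objects of that API:

```matlab
% State of a single job, e.g. 'queued', 'running', 'finished' or 'failed'
disp(job.State)

% All jobs of this scheduler with their states
allJobs = sched.Jobs;
for i = 1:numel(allJobs)
    fprintf('Job %d: %s\n', allJobs(i).ID, allJobs(i).State);
end
```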

The HERO wiki offers much more information about the cluster and how to use it; see, e.g., the section “Checking the status of the job” on the page Brief Introduction to HPC Computing.

Information on how to use parfor loops, and on their restrictions, can be found here: http://blogs.mathworks.com/loren/2009/10/02/using-parfor-loops-getting-up-and-running/
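Most parfor restrictions follow from how MATLAB classifies the variables in the loop. A small, hypothetical SNR-to-gain loop illustrates the main classes (sliced input, sliced output, reduction, broadcast, temporary); it runs locally with or without an open pool:

```matlab
snrs     = [-10 -5 0 5 10];     % sliced input: each iteration reads only snrs(k)
gains    = zeros(size(snrs));   % sliced output: each iteration writes only gains(k)
total    = 0;                   % reduction variable: only updated as total = total + ...
refLevel = 1;                   % broadcast variable: read-only inside the loop
parfor k = 1:numel(snrs)
    g = refLevel * 10^(snrs(k) / 20);   % temporary variable, local to one iteration
    gains(k) = g;
    total = total + g;
end
% Not allowed: using a value from another iteration, e.g. gains(k-1),
% because iterations may run in any order on different workers.
```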

Debugging inside parfor loops is not possible when they are executed in parallel. A reasonable way to develop your scripts (similar to this minimal example) is therefore to test them locally on your PC first, without and then with a local matlabpool, and to move to the cluster only once that works.
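Because breakpoints do not work inside a parallel parfor loop, one common workaround (a suggestion, not part of the original framework) is to catch errors per iteration and collect their messages in a variable defined outside the loop; in a batch job that variable is then returned by load(job). The failing processing step below is a hypothetical stand-in:

```matlab
snrs = [-30 -10 0 10];
errMsgs = cell(size(snrs));   % sliced output: one message slot per iteration
out = zeros(size(snrs));
parfor k = 1:numel(snrs)
    try
        % hypothetical processing step that fails for very low SNRs
        if snrs(k) < -20
            error('sketch:snrTooLow', 'SNR of %d dB is below the supported range', snrs(k));
        end
        out(k) = 10^(snrs(k) / 20);
    catch err
        errMsgs{k} = sprintf('iteration %d (SNR %d dB): %s', k, snrs(k), err.message);
    end
end
failed = errMsgs(~cellfun(@isempty, errMsgs));
fprintf('%s\n', failed{:});   % report all failed iterations at once
```

For interactive debugging, temporarily replacing parfor by for usually works as well, since a valid parfor body also runs as an ordinary for loop, where breakpoints are available.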